This repo provides a framework for seamlessly integrating LLM distillation into your existing LLM pipelines. LLM distillation is the process of using smaller, less costly models alongside larger models to save on costs. Here is a great resource that provides in-depth detail of the process.

As an example, the repo contains `openai_distillation.py`, which uses this framework to distill OpenAI models. Here's how you can do it yourself:

- First, add your API keys inside `/env`. This repo uses Qdrant as an embeddings store
- Extend the `LLMProvider` inside `llm_services.py` to work with your LLM
- Extend the `DatasetProvider` inside `dataset_services.py` to format the collected data according to your LLM
- Finally, extend the `FineTuningProvider` inside `llm_services.py` to create relevant fine tuning jobs
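As an illustration of the dataset step, here is a minimal sketch of a provider that formats collected prompt/response pairs as chat-style JSONL (one `{"messages": [...]}` object per line). The base class below is a hypothetical stand-in: the real `DatasetProvider` in `dataset_services.py` may expose different method names and signatures, so treat `format` and `ChatJsonlDatasetProvider` as assumptions.

```python
import json
from abc import ABC, abstractmethod


class DatasetProvider(ABC):
    """Hypothetical stand-in for the base class in dataset_services.py."""

    @abstractmethod
    def format(self, pairs: list[tuple[str, str]]) -> str:
        """Turn (prompt, response) pairs into a fine-tuning dataset."""


class ChatJsonlDatasetProvider(DatasetProvider):
    """Formats pairs as chat-style JSONL, one training example per line."""

    def format(self, pairs: list[tuple[str, str]]) -> str:
        lines = []
        for prompt, response in pairs:
            example = {
                "messages": [
                    {"role": "user", "content": prompt},
                    {"role": "assistant", "content": response},
                ]
            }
            lines.append(json.dumps(example))
        return "\n".join(lines)


# Usage: format a single collected pair.
provider = ChatJsonlDatasetProvider()
dataset = provider.format([("What is RWKV?", "An RNN-style language model.")])
```

If your LLM expects a different training format, only the body of `format` should need to change.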

- Each text generation request and its generated response are collected and stored in an embeddings store
- A tag of `indexed: False` is added to each newly created embedding
- Inside a scheduled job, each embedding with tag `indexed: False` is collected
- The data is formatted and used to fine-tune a small model
- Each embedding's tag is updated to `indexed: True`
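The collection and fine-tuning cycle above can be sketched as follows. This is a simplified illustration, not the repo's implementation: `InMemoryStore` is a hypothetical in-memory stand-in for the Qdrant embeddings store, it keeps raw text rather than embeddings, and `fine_tune` is any callable you supply to submit a job.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Record:
    prompt: str
    response: str
    indexed: bool = False  # mirrors the `indexed: False` tag on new entries


class InMemoryStore:
    """Hypothetical stand-in for the embeddings store (Qdrant in this repo)."""

    def __init__(self) -> None:
        self.records: list[Record] = []

    def collect(self, prompt: str, response: str) -> None:
        # Every request/response pair is stored with indexed=False.
        self.records.append(Record(prompt, response))

    def unindexed(self) -> list[Record]:
        return [r for r in self.records if not r.indexed]


def run_fine_tuning_job(
    store: InMemoryStore,
    fine_tune: Callable[[list[tuple[str, str]]], None],
) -> int:
    """Body of the scheduled job: gather, fine-tune, then mark as indexed."""
    batch = store.unindexed()
    if not batch:
        return 0
    fine_tune([(r.prompt, r.response) for r in batch])
    for record in batch:
        record.indexed = True  # tag becomes `indexed: True`
    return len(batch)


# Usage: collect two pairs, then run the job once.
store = InMemoryStore()
store.collect("What is RWKV?", "An RNN-style language model.")
store.collect("What is Qdrant?", "A vector database.")
submitted: list[list[tuple[str, str]]] = []
processed = run_fine_tuning_job(store, submitted.append)
```

Marking records only after `fine_tune` returns means a failed submission leaves them tagged `indexed: False`, so the next scheduled run picks them up again.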

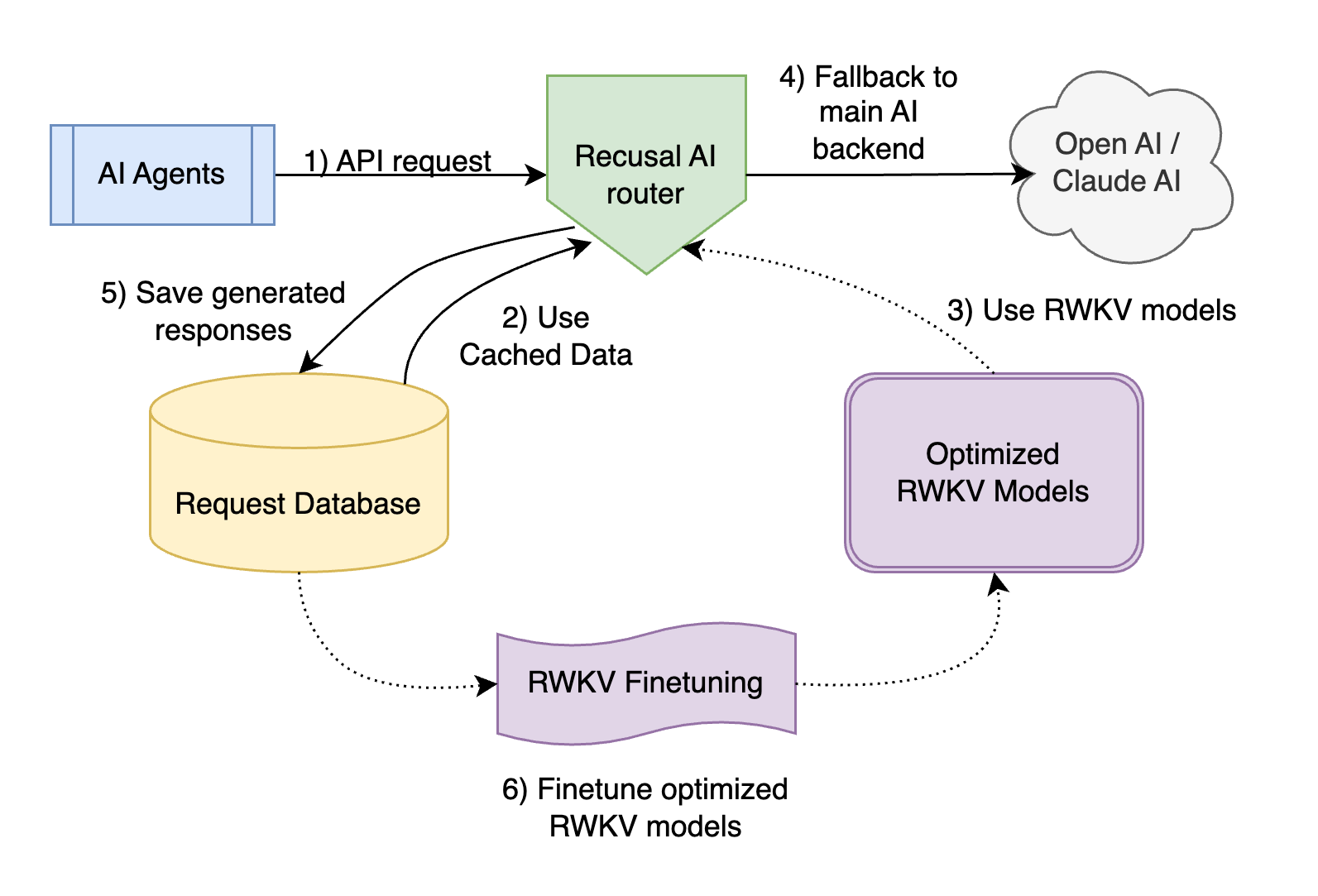

- On each request, an AI request router is used
- The user's query is searched for inside the embeddings store
- If an entry above a certain similarity threshold and tagged `indexed: True` is found, the request is routed to the distilled model
- Otherwise, the request falls back to a main model (OpenAI, Mixtral...) and the data is collected to be fine-tuned later
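The routing decision above can be sketched as a small function. This is a sketch under stated assumptions: the store is modelled as a plain list of `(vector, indexed)` pairs rather than a Qdrant collection, cosine similarity is used as the similarity measure, and the `0.9` threshold is an arbitrary illustrative value, not one taken from this repo.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def route(
    query_vector: Sequence[float],
    store: list[tuple[Sequence[float], bool]],
    threshold: float = 0.9,  # assumed value, tune for your data
) -> str:
    """Return "distilled" if a similar, already-indexed entry exists, else "main"."""
    for vector, indexed in store:
        if indexed and cosine_similarity(query_vector, vector) >= threshold:
            return "distilled"
    # No match: fall back to the main model; the caller should also
    # collect this request/response pair for later fine-tuning.
    return "main"


# Usage: one indexed entry and one entry still awaiting fine-tuning.
store = [([1.0, 0.0], True), ([0.0, 1.0], False)]
seen_and_trained = route([1.0, 0.0], store)
seen_not_trained = route([0.0, 1.0], store)
```

Here the first query matches an indexed entry and goes to the distilled model, while the second matches only an entry that has not been fine-tuned on yet, so it falls back to the main model.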

| |
|---|
| Distillation architecture as proposed by Recursal.ai |

This technique was originally proposed by Recursal.ai in their post "🏘️ Run over 120+ NPCs, in a tiny AI town with RWKV". This repo extracts, extends, and generalizes their approach for use in production.