
Performance optimization: ReadableStreamDefaultReader.prototype.readMany

#1236

Comments


I've been considering the possibility of a new kind of reader that implements the Body mixin, which would provide opportunities for these kinds of optimizations. e.g.

const readable = new ReadableStream({ ... });

const reader = new ReadableStreamBodyReader(readable);

await reader.arrayBuffer();

// or

await reader.blob();

// or

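await reader.text();

The ReadableStreamBodyReader would provide opportunities to more efficiently consume the ReadableStream without going through the typical chunk-by-chunk slow path and would not require any changes to the existing standard API surface.

Now, this approach would necessarily consume the entire readable, so if we wanted an alternative that would consume the stream incrementally, this won't work. The basic idea remains the same, though: introducing a new kind of Reader is the right way to accomplish this.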
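To make the proposal concrete, here is a minimal userland sketch of what such a reader could look like. ReadableStreamBodyReader is not part of any standard; the class below is a hypothetical approximation, and the streamOf helper exists only for this example. It assumes every chunk is a Uint8Array, and it still goes through the chunk-by-chunk path that a native implementation would be free to skip.

```javascript
// Hypothetical userland approximation of the proposed ReadableStreamBodyReader.
// A native version could avoid the per-chunk reads and the final merge.
class ReadableStreamBodyReader {
  #reader;

  constructor(readable) {
    // Acquiring the default reader locks the stream, like any other reader.
    this.#reader = readable.getReader();
  }

  // Drains the stream and returns all bytes as one ArrayBuffer.
  // Assumption: every chunk is a Uint8Array.
  async arrayBuffer() {
    const chunks = [];
    let total = 0;
    for (;;) {
      const { value, done } = await this.#reader.read();
      if (done) break;
      chunks.push(value);
      total += value.byteLength;
    }
    // Merge the collected chunks into a single contiguous buffer.
    const merged = new Uint8Array(total);
    let offset = 0;
    for (const chunk of chunks) {
      merged.set(chunk, offset);
      offset += chunk.byteLength;
    }
    return merged.buffer;
  }

  // Drains the stream and decodes the bytes as UTF-8.
  async text() {
    return new TextDecoder().decode(await this.arrayBuffer());
  }
}

// Example-only helper: a stream that enqueues each string as UTF-8 bytes.
function streamOf(parts) {
  const encoder = new TextEncoder();
  return new ReadableStream({
    start(controller) {
      for (const part of parts) controller.enqueue(encoder.encode(part));
      controller.close();
    },
  });
}
```

Usage would look like `await new ReadableStreamBodyReader(streamOf(["a", "b"])).text()`. As in the proposal, each method consumes the whole stream, so only one of them can be called per reader.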

Yeah, that makes sense.

This is nice. Only thought here is maybe

Thinking more on this today – I think this problem needs to be addressed in the controller more so than the reader. The problem is that the reader can't tell the controller what it wants to do because the controller starts doing work before the reader is acquired. For converting a stream to an array/arrayBuffer/text/blob, instead of creating a bunch of chunks and merging them at the end, it should just not create a bunch of chunks in the first place. For streaming an HTTP response body, the HTTP socket/client is much better equipped to efficiently chunk up input than ReactDOM. I think a new controller type would be more effective, but
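The point that the controller starts working before a reader exists can be observed directly. The sketch below is illustrative only (observeEagerWork is a name made up for this example): it constructs a stream with the default queuing strategy, never calls getReader(), and records which underlying-source callbacks ran.

```javascript
// Records which underlying-source callbacks run before any reader is acquired.
async function observeEagerWork() {
  const events = [];
  const readable = new ReadableStream({
    start() {
      // Called while the stream is being constructed.
      events.push("start");
    },
    pull(controller) {
      // Called once start() has settled, because the queue is below
      // its high-water mark even though nobody is reading yet.
      events.push("pull");
      controller.enqueue(new Uint8Array(1));
    },
  });

  // Let start() and any initial pull() run. No reader has been acquired.
  await new Promise((resolve) => setTimeout(resolve, 0));
  return { events, locked: readable.locked };
}
```

In an implementation that follows the Streams Standard, events should already contain start followed by a pull while locked is still false, so by the time a reader could state what format it wants, chunks have already been produced.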


I like that and I’ve already implemented specialized functions for each of those cases, so it would make a lot of sense to just have a separate reader.

Ideally, it would have a way to tell the WritableStream the format it wants too.

On Tue, Jun 14, 2022 at 7:00 AM James M Snell ***@***.***> wrote:

I've been considering the possibility of a new kind of reader that implements the Body mixin, which would provide opportunities for these kinds of optimizations. e.g.

const readable = new ReadableStream({ ... });

const reader = new ReadableStreamBodyReader(readable);

await reader.arrayBuffer();

// or

await reader.blob();

// or

await reader.text();

The ReadableStreamBodyReader would provide opportunities to more efficiently consume the ReadableStream without going through the typical chunk-by-chunk slow path and would not require any changes to the existing standard API surface.


Some sources enqueue many small chunks, but ReadableStreamDefaultReader.prototype.read only returns one chunk at a time.

ReadableStreamDefaultReader.prototype.read has non-trivial overhead (at least one tick per call).

When the developer knows they want to read everything queued, it is faster to ask for all the values available directly instead of reading one value at a time.
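As a rough illustration of that overhead, the sketch below drains a stream with the standard read() loop and counts the calls. Both function names are made up for this example, and it measures only the number of awaited calls, not time. Every queued chunk costs one awaited read(), plus one final call to observe done, even when all chunks were already sitting in the queue.

```javascript
// Drains a stream one chunk at a time and counts how many read() calls it took.
async function drainOneAtATime(readable) {
  const reader = readable.getReader();
  const chunks = [];
  let readCalls = 0;
  for (;;) {
    readCalls += 1;
    // Each call resolves asynchronously, even if the chunk is already queued.
    const { value, done } = await reader.read();
    if (done) break;
    chunks.push(value);
  }
  return { chunks, readCalls };
}

// Example-only source: enqueues `count` one-byte chunks up front, then closes.
function manySmallChunks(count) {
  return new ReadableStream({
    start(controller) {
      for (let i = 0; i < count; i++) controller.enqueue(new Uint8Array([i]));
      controller.close();
    },
  });
}
```

For a stream built with manySmallChunks(n), the loop makes n + 1 awaited calls. A single call that returns everything currently queued, as proposed here, would replace most of them.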

I implemented this in Bun.

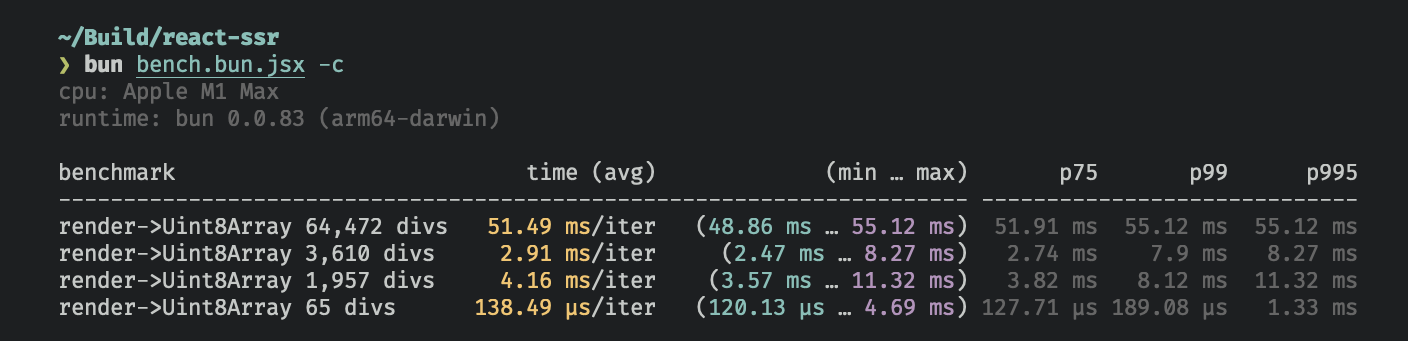

This led to a > 30% performance improvement to server-side rendering React via

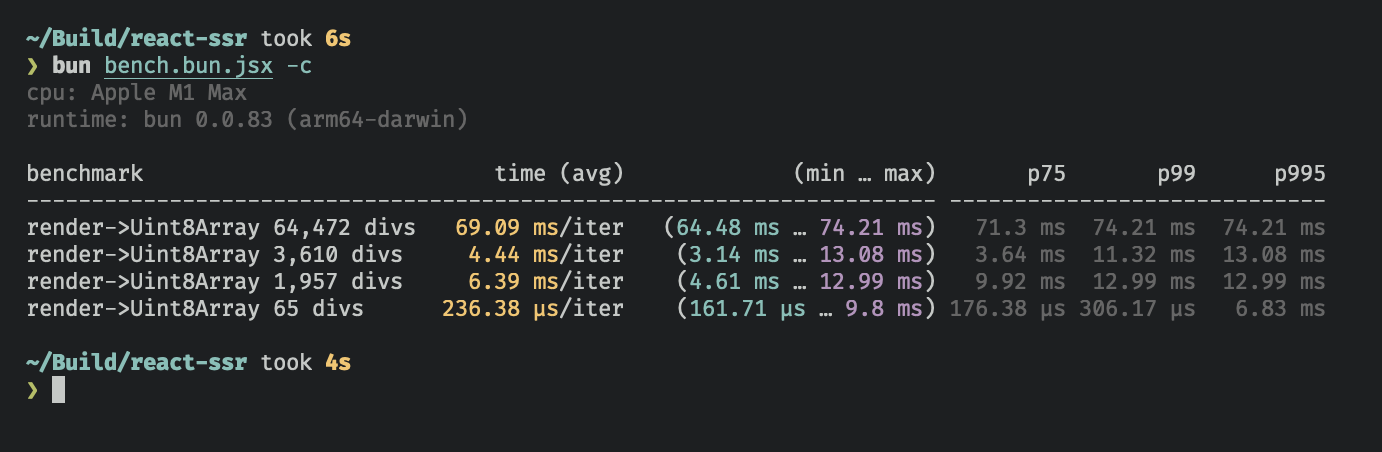

new Response(await renderToReadableStream(reactElement)).arrayBuffer()Before:

After:

Note: these numbers are out of date, bun's implementation is faster now - but the delta above can directly be attributed to ReadableStreamDefaultReader.prototype.readMany

Here is an example implementation that uses JavaScriptCore builtins

I have no opinion about the name of the function - just that some way to read all the queued values in one call should be exposed in the public interface for developers.
