
- Green Field Tenancies

#### Using the Automation Toolkit via CLI


- Before you Begin
- Introduction to Jenkins with the toolkit
- Create resources in OCI via Jenkins (Greenfield Workflow)
- Must Read: Managing Network for Greenfield Workflow
- Must Read: Provisioning of Resources - Instances/OKE/SDDC/Database
- Must Read: Provisioning of multiple services together
- Export Resources from OCI via Jenkins (Non-Greenfield Workflow)
- Switch between CLI and Jenkins
- Remote Management of Terraform State File


### Videos

### Known Behaviour

### Learn More...

- Grouping of generated Terraform files
- OCI Resource Manager Upload
- OPA for Compliance with Terraform
- Additional CIS Compliance Features
- CD3 Validator Features


- Support for Additional Attributes
- Automation Toolkit Learning Videos
- Expected Behaviour of Automation Toolkit
- FAQs



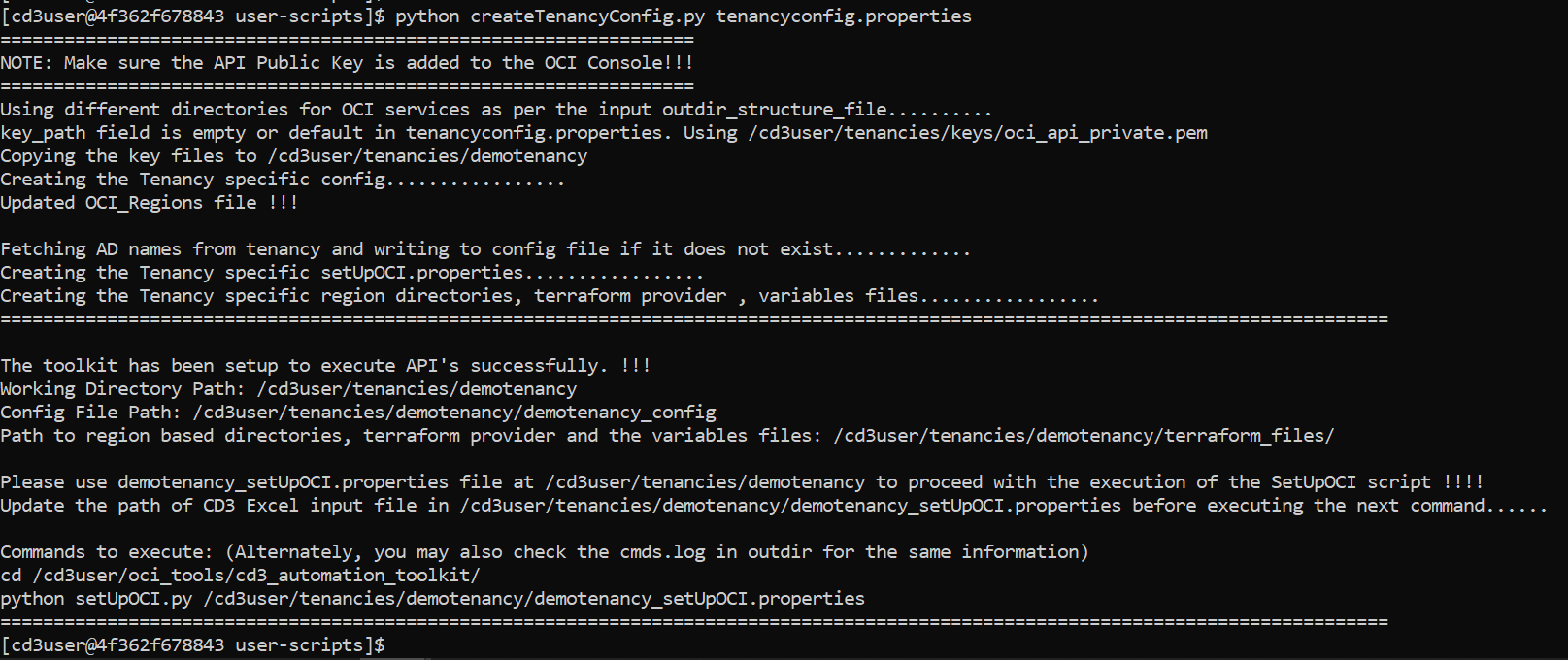

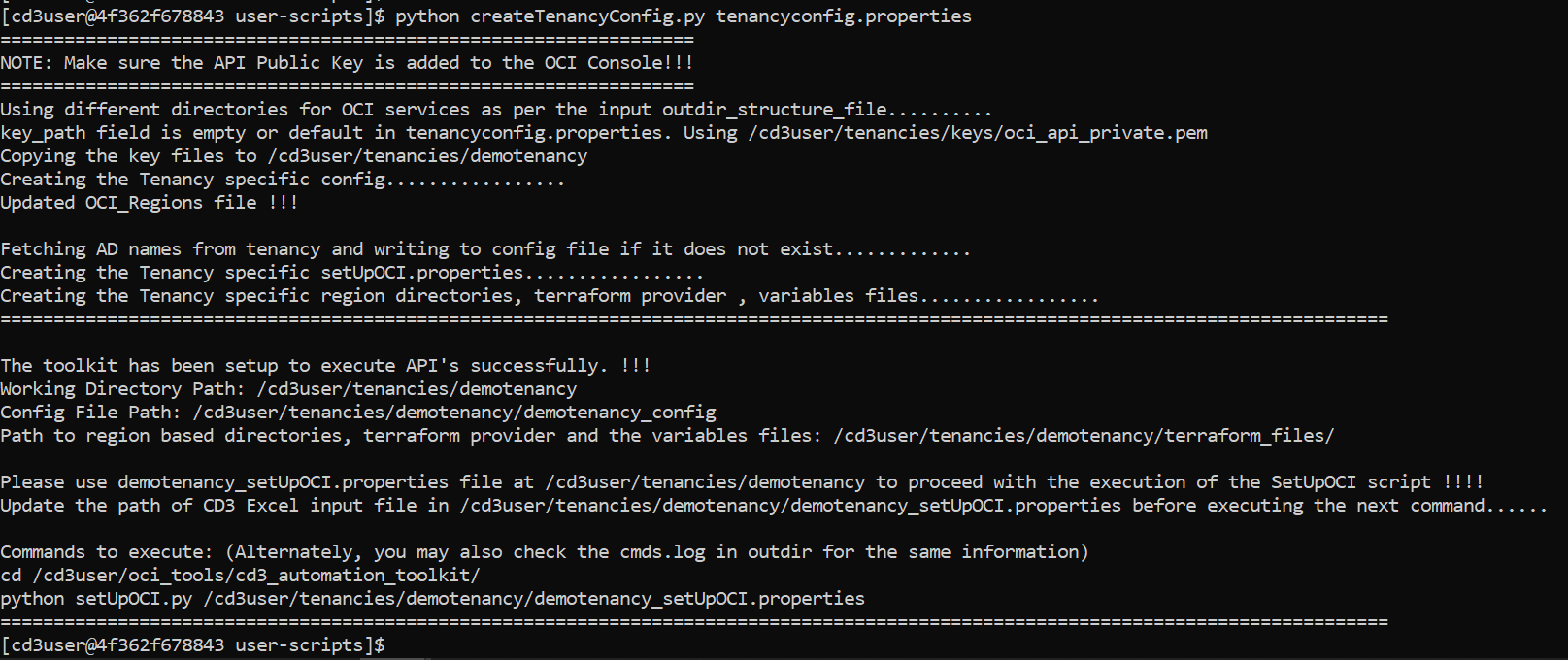

- Paste the contents of the PEM public key in the dialog box and click **Add**.

### **Step 4 - Edit tenancyconfig.properties**:
Enter the details in the **tenancyconfig.properties** file. Review the 'outdir_structure_file' parameter as per your requirements. A separate outdir structure is recommended if the tenancy has a large number of objects.
```
[Default]
# Mandatory Fields
# Friendly name for the Customer Tenancy, e.g. demotenancy;
# The generated .auto.tfvars will be prefixed with this customer name
customer_name=
tenancy_ocid=
fingerprint=
user_ocid=

# Path of API Private Key (PEM Key) File; If the PEM keys were generated by running createAPI.py, leave this field empty.
# Defaults to /cd3user/tenancies/keys/oci_api_private.pem when left empty.
key_path=

# Region; defaults to us-ashburn-1 when left empty.
region=
# The outdir_structure_file defines the grouping of the terraform .auto.tfvars for the various generated resources.
# To have all the files generated in the corresponding region, leave this variable blank.
# To group resources into different directories within each region, specify the absolute path to the file.
# The default file is specified below. You can change the grouping in this file to suit your deployment.
outdir_structure_file=
#or
#outdir_structure_file=/cd3user/oci_tools/cd3_automation_toolkit/user-scripts/outdir_structure_file.properties

# Optional Fields
# SSH public key for launched instances
ssh_public_key=
```
### **Step 5 - Initialise the environment**:
Initialise your environment to use the Automation Toolkit.

### **Step 2 - Choose Authentication Mechanism for OCI SDK**
* Click [here](/cd3_automation_toolkit/documentation/user_guide/Auth_Mechanisms_in_OCI.md) to configure any one of the available authentication mechanisms.

### **Step 3 - Edit tenancyconfig.properties**:
* Run `cd /cd3user/oci_tools/cd3_automation_toolkit/user-scripts/`
* Fill in the input parameters in the **tenancyconfig.properties** file.
* Make sure to:
  - Have the details ready for the authentication mechanism you plan to use.
  - Use the same customer_name for a tenancy, even if the script needs to be executed multiple times.
  - Review the **'outdir_structure_file'** parameter as per your requirements. A separate outdir structure is recommended for managing a large number of resources.
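The `[Default]` section of `tenancyconfig.properties` uses plain `key=value` lines with `#` comments, so Python's standard `configparser` can read it. The sketch below assumes the API-key fields listed under "Mandatory Fields" in the template (`customer_name`, `tenancy_ocid`, `fingerprint`, `user_ocid`). It also applies the documented defaults for `key_path` and `region`. Other authentication mechanisms may need a different set of fields. `load_tenancy_config` is a hypothetical helper for illustration and is not part of the toolkit.

```python
import configparser

# Assumption: the API-key fields marked "Mandatory Fields" in the template.
MANDATORY_FIELDS = ("customer_name", "tenancy_ocid", "fingerprint", "user_ocid")

# Defaults documented in the template comments for fields left empty.
DEFAULTS = {
    "key_path": "/cd3user/tenancies/keys/oci_api_private.pem",
    "region": "us-ashburn-1",
}


def load_tenancy_config(text: str) -> dict:
    """Hypothetical helper: parse the [Default] section, fail fast on
    empty mandatory fields, and fill in the documented defaults."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    # "[Default]" is a regular, case-sensitive section name,
    # distinct from configparser's special "DEFAULT" section.
    section = parser["Default"]
    config = {key: value.strip() for key, value in section.items()}

    missing = [field for field in MANDATORY_FIELDS if not config.get(field)]
    if missing:
        raise ValueError(f"Missing mandatory fields: {', '.join(missing)}")

    for key, default in DEFAULTS.items():
        if not config.get(key):
            config[key] = default
    return config


if __name__ == "__main__":
    sample = "\n".join([
        "[Default]",
        "customer_name=demotenancy",
        "tenancy_ocid=ocid1.tenancy.oc1..example",
        "fingerprint=aa:bb:cc",
        "user_ocid=ocid1.user.oc1..example",
        "key_path=",
        "region=",
    ])
    print(load_tenancy_config(sample)["region"])  # prints us-ashburn-1
```

Validating before running the toolkit surfaces an empty `customer_name` or OCID early. Without this check, the mistake would only show up when the OCI SDK fails to authenticate.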

- Overwrites the specific tabs of the Excel sheet with the exported resource details from OCI.
- Generates Terraform configuration files (`*.auto.tfvars`).
- Generates shell scripts with import commands (`tf_import_commands_<resource>_nonGF.sh`).
- Executes the shell scripts with import commands (`tf_import_commands_<resource>_nonGF.sh`) generated in the previous stage.
- The respective pipelines are triggered automatically from the setUpOCI pipeline, based on the services chosen for export. You can also trigger them manually when required.
- If the 'Run Import Commands' stage was successful (i.e. `tf_import_commands_<resource>_nonGF.sh` ran successfully for all services chosen for export), the 'Terraform Plan' stage of each triggered Terraform pipeline should show 'No Changes'.
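The export stages end with a check that 'Terraform Plan' reports 'No Changes' once the import scripts have run. The minimal Python sketch below reproduces that check outside Jenkins. It assumes a hypothetical per-service output directory that already holds the generated `*.auto.tfvars`, the `tf_import_commands_<resource>_nonGF.sh` scripts, and a Terraform working directory initialised with `terraform init`. `find_import_scripts`, `describe_plan_result` and `verify_import` are illustrative names, not toolkit functions. The exit-code mapping follows Terraform's documented `-detailed-exitcode` behaviour: 0 means no changes, 1 means error and 2 means changes present.

```python
import glob
import os
import subprocess

# Exit codes documented for `terraform plan -detailed-exitcode`.
PLAN_NO_CHANGES = 0
PLAN_ERROR = 1
PLAN_HAS_CHANGES = 2


def find_import_scripts(service_dir: str) -> list:
    """Return the generated tf_import_commands_<resource>_nonGF.sh scripts, sorted by path."""
    pattern = os.path.join(service_dir, "tf_import_commands_*_nonGF.sh")
    return sorted(glob.glob(pattern))


def describe_plan_result(exit_code: int) -> str:
    """Map a -detailed-exitcode value to the status shown in the pipeline."""
    if exit_code == PLAN_NO_CHANGES:
        return "No Changes"
    if exit_code == PLAN_HAS_CHANGES:
        return "Changes Present"
    return "Error"


def verify_import(service_dir: str) -> str:
    """Hypothetical flow: run each import script, then confirm the plan is empty."""
    for script in find_import_scripts(service_dir):
        # check=True stops at the first failing import script.
        subprocess.run(["bash", script], cwd=service_dir, check=True)
    result = subprocess.run(
        ["terraform", "plan", "-input=false", "-detailed-exitcode"],
        cwd=service_dir,
    )
    return describe_plan_result(result.returncode)
```

A result other than "No Changes" after a successful import usually means the `*.auto.tfvars` and the imported state disagree for some resource. Inspect that resource before applying anything.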
