Basic hypercerts evaluations/attestations #1259


-


@holkeb could you provide an example for "3. V0: Users can create an evaluation based on a template pointing to a hypercert (or the same space in the public goods space)"? Is this basically any arbitrary evaluation format that just requires the hypercertID field?


-


Side note: here is a set of impact metrics outlined by LauNaMu: https://plaid-cement-e44.notion.site/e49fbb14180d451baf5e393bbd64f0f4?v=0fa6de802b8c431d81bcbc3c8f61e5ad


-


It sounds like 3 is the stage where we take a white-glove approach to the evaluation schema and work with OSO and other close collaborators to refine it into a schema that's generalizable. In step 4 we would programmatize this flow and give control to admins. Somewhere between 3 and 4 is probably also the point where we can loop in other evaluation tools like Deresy/gitcoinreviews.co to validate our approach. Looping them in would also set us up for 5-7.


-


For the sake of an iterative approach, I think we can specify 1 + 2 as the first iteration. We can make an evaluator app based on the starter app and start implementing EAS. Once we have the EAS flow, we can start thinking about a non-EAS flow that mimics it (i.e. signed attestations on IPFS tracked by us). Note/idea: if attestations always flow through an 'adapter' contract (which could also function as a resolver), we have a central point of reference for all attestations.


-


To start, great and detailed write-up on what's needed to use attestations for evaluating impact. Below are my thoughts, questions and feedback, going through the write-up from the top down.

Use Cases

Examples

1. V0: Users can attest a hypercert, i.e. confirm that the information is correct and that the contributors and minter are who they claim to be. Signs:

I think we should follow the EAS attestation structure, which specifies an attester (organisation) and a recipient (hypercert), with all other fields custom. I'm also wondering what the claimID is and whether it is needed in the schema, as right now I'm assuming it's the ID generated upon making the attestation. Also, can we create just one schema by adding tags as an optional field?

Proposed schema structure

Custom Fields

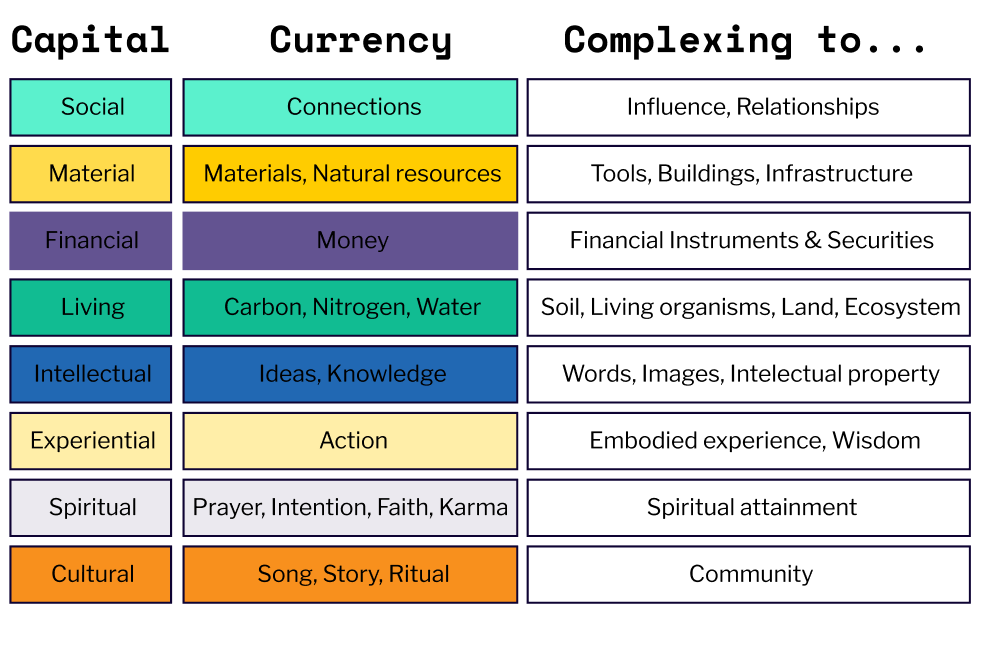
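A minimal TypeScript sketch of the proposed structure, assuming EAS-style `attester` and `recipient` plus custom fields, and showing that use cases 1 and 2 can share one payload shape when `tags` is optional. The field names (`hypercertId`, `sources`, `tags`), the helper `buildAttestation` and the placeholder addresses are hypothetical; the round URL and contract are the Gitcoin example from the use cases.

```typescript
// Hypothetical payload for a hypercert attestation: EAS-style attester and
// recipient plus custom fields. Field names are illustrative only.
interface Source {
  url: string; // e.g. a Gitcoin round page
  contract?: string; // optional contract address behind the source
}

interface HypercertAttestation {
  attester: string; // address of the attesting organisation
  recipient: string; // EAS recipients are addresses, e.g. the hypercert owner (assumption)
  hypercertId: string; // identifies the attested hypercert; format left open here
  sources: Source[]; // what the attester points to as evidence
  tags?: string[]; // optional: only set for use case 2
}

// One builder for both use cases: tags are attached only when given,
// so plain and tagged attestations share a single shape.
function buildAttestation(
  attester: string,
  recipient: string,
  hypercertId: string,
  sources: Source[],
  tags: string[] = []
): HypercertAttestation {
  const attestation: HypercertAttestation = { attester, recipient, hypercertId, sources };
  if (tags.length > 0) {
    attestation.tags = tags.slice();
  }
  return attestation;
}

// Placeholder attester/recipient/hypercert IDs; the URL and contract are the Gitcoin example.
const gitcoinRound: Source = {
  url: "https://explorer.gitcoin.co/#/round/10/0xb5c0939a9bb0c404b028d402493b86d9998af55e",
  contract: "0xb5c0939a9bb0c404b028d402493b86d9998af55e",
};

const plain = buildAttestation("0xGitcoinAttester", "0xHypercertOwner", "hypercert-1", [gitcoinRound]);
const tagged = buildAttestation("0xGitcoinAttester", "0xHypercertOwner", "hypercert-1", [gitcoinRound], ["gg19"]);
```

On-chain, an EAS schema has a fixed list of ABI-encoded fields, so "optional" would in practice mean a `string[]` tags field that is left empty; the sketch only models the off-chain shape.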

4. V0: Admin can create a template for evaluations. This brings us to the issue of "how to manage a bazaar of evaluations": how do we know which ones to support, and which ones not to?

A central registry works but may not be needed, and different orgs like Gitcoin can create their own registry of accepted evaluation schemas. Resolvers probably wouldn't work, because certain orgs may want their own custom resolver to do things like mint an NFT. I think a registry works, centralized at the org running the grant round, which determines what evaluation schemas are accepted. To sum up, I think a registry should live at the org (Gitcoin) and/or grant round (GPN QF 19) level.

Questions

Can we structure/formalise the tagging and external URIs? If we start with a side-app we can implement checks on the frontend.
- I think we can formalize links to use https, ipfs, etc. As for tags, keeping them more open is probably best, perhaps only defining their casing.

How do we support chains that don't have EAS? Upload a signed attestation to IPFS and store the link somewhere else? Maybe via a Poster event linking the claimID and the evaluation CID?
- What are the relevant chains here, and does EAS plan to support them? We can also deploy EAS on other chains, ideally in collaboration with EAS. My current understanding is that EAS is supported on any new OP chain by default at launch. The current OP chains that don't have it are Zora, PGN and maybe some others. Outside of OP, Arbitrum and Polygon seem relevant. Hopefully we won't need a workaround as EAS gains more adoption.

How do we support a multitude of evaluation schemas?
- Touched upon above. Expanding on it, I think building the accepted list of evaluation schemas into the deployment and running of a grant round makes sense, so that when launching a grant round you can specify the accepted schemas, or better yet, different registries, so you don't have to create your own if you trust Gitcoin's registry, for example. I think this structure provides the rigidity needed while staying flexible over time and starting off on a more decentralized footing.

Thoughts

As touched upon in other responses, I'm curious about how we capture impact and action in a standardized way. When @bitbeckers and I talked, we discussed the different forms of capital as the starting point for defining impact. This could look like one schema with a field describing the type of capital and other fields describing the actions performed, or multiple schemas, one per capital, each with the fields pertinent to that capital. I think labelling capital at the attestation level makes more sense than at the hypercert level: a hypercert may cover multiple capitals, and letting attestations label the capital helps build a clearer picture of the share of each type of impact a hypercert has had.
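The registry idea can be sketched as follows, assuming an org-level registry is simply a list of accepted schema IDs and a grant round accepts a schema either directly or through a registry it trusts. `SchemaRegistry`, `GrantRound`, `isSchemaAccepted` and the schema IDs are hypothetical placeholders (on EAS they would be schema UIDs).

```typescript
// Hypothetical model of the registry idea: orgs keep lists of accepted
// evaluation schemas, and a grant round accepts schemas directly or by
// trusting an org's registry. Schema IDs are placeholder strings.
interface SchemaRegistry {
  owner: string;
  acceptedSchemas: string[];
}

interface GrantRound {
  name: string;
  acceptedSchemas: string[]; // schemas the round operator lists itself
  trustedRegistries: SchemaRegistry[]; // registries the round defers to
}

// A schema is accepted if the round lists it or any trusted registry does.
function isSchemaAccepted(round: GrantRound, schemaId: string): boolean {
  if (round.acceptedSchemas.indexOf(schemaId) !== -1) {
    return true;
  }
  return round.trustedRegistries.some(
    (registry) => registry.acceptedSchemas.indexOf(schemaId) !== -1
  );
}

const gitcoinRegistry: SchemaRegistry = {
  owner: "Gitcoin",
  acceptedSchemas: ["schema-basic-attestation", "schema-tagged-attestation"],
};

const gpnRound: GrantRound = {
  name: "GPN QF 19",
  acceptedSchemas: ["schema-gpn-custom"],
  trustedRegistries: [gitcoinRegistry],
};
```

Trusting a registry by reference means a round picks up changes to, say, Gitcoin's list automatically; a round that wants a frozen set would copy the IDs into its own `acceptedSchemas` instead.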


-

The goal of this dev cycle is to integrate EAS-based evaluation into the hypercerts ecosystem. The idea is to take a gradual, centralised approach that lets us identify practical flows and refine the available tooling in the process.

From a roadmap perspective, this is a fundamental requirement before we start integrating with 3rd party evaluators/tools.

Relevant issues

Use cases

From the design doc:

1. V0: Users can attest a hypercert, i.e. confirm that the information is correct and that the contributors and minter are who they claim to be.

Example use case: Gitcoin attests a hypercert that was part of a round.

Signs:

In the Gitcoin example:

[https://explorer.gitcoin.co/#/round/10/0xb5c0939a9bb0c404b028d402493b86d9998af55e, {contract: 0xb5c0939a9bb0c404b028d402493b86d9998af55e}]

2. V0: Users can include tags for a hypercert in the attestation.

Example use case: Gitcoin attests a hypercert that was part of a round and labels the hypercert as part of that round.

Signs:

In the Gitcoin example:

[https://explorer.gitcoin.co/#/round/10/0xb5c0939a9bb0c404b028d402493b86d9998af55e, {contract: 0xb5c0939a9bb0c404b028d402493b86d9998af55e}]

3. V0: Users can create an evaluation based on a template pointing to a hypercert (or the same space in the public goods space)

Example use case: Open Source Observer creates an evaluation (a measurement of a metric) of RetroPGF or Gitcoin projects and attaches it to their hypercerts.
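One possible reading of items 3 and 4, sketched under assumptions: an admin-defined template lists required fields and their kinds, and an evaluation carries the template ID, the hypercert it points to and values for those fields. The types, `validateEvaluation` and the metric names are hypothetical, not Open Source Observer's actual format.

```typescript
// Hypothetical template-based evaluation. Field kinds are kept minimal.
type FieldKind = "number" | "string";

interface EvaluationTemplate {
  id: string;
  fields: { [name: string]: FieldKind }; // required fields and their kinds
}

interface Evaluation {
  templateId: string;
  hypercertId: string; // every evaluation points at a hypercert
  values: { [name: string]: number | string };
}

// Returns a list of problems; an empty list means the evaluation fits the template.
function validateEvaluation(template: EvaluationTemplate, evaluation: Evaluation): string[] {
  const problems: string[] = [];
  if (evaluation.templateId !== template.id) {
    problems.push("template mismatch");
  }
  if (evaluation.hypercertId === "") {
    problems.push("missing hypercertId");
  }
  for (const name of Object.keys(template.fields)) {
    const value = evaluation.values[name];
    if (value === undefined) {
      problems.push(`missing field: ${name}`);
    } else if (typeof value !== template.fields[name]) {
      problems.push(`wrong type for field: ${name}`);
    }
  }
  return problems;
}

// Example template with made-up metric names.
const activityTemplate: EvaluationTemplate = {
  id: "project-activity-v0",
  fields: { activeContributors: "number", summary: "string" },
};

const evaluation: Evaluation = {
  templateId: "project-activity-v0",
  hypercertId: "hypercert-1",
  values: { activeContributors: 12, summary: "Regular releases over the funding period" },
};
```

Returning a list of problems rather than a boolean lets a side-app show every issue on the frontend at once.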

4. V0: Admin can create a template for evaluations

This brings us to the issue of "how to manage a bazaar of evaluations": how do we know which ones to support, and which ones not to?

Some solutions:

5. V0: Users can see attestations and evaluations on the view page of a hypercert

When we know the schema, we can find the attestations using EAS. When we're flexible about the schemas we support, I think we should consider the 'simple contract' solution outlined under 4, which would let us catch all evaluations pointing to a hypercert.
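A minimal in-memory model of what such a catch-all lookup would provide, assuming every evaluation records the ID of the hypercert it points to: evaluations are filed by hypercert regardless of schema, and a caller that knows the schema can narrow the result. `EvaluationIndex` and its fields are hypothetical; on-chain, this role would fall to the 'simple contract'.

```typescript
// Hypothetical in-memory model of a catch-all index: every evaluation,
// whatever its schema, is filed under the hypercert it points to.
interface IndexedEvaluation {
  uid: string; // attestation UID (placeholder)
  schemaId: string;
  attester: string;
  hypercertId: string;
}

class EvaluationIndex {
  // Prototype-free object so hypercert IDs can't collide with built-in keys.
  private byHypercert: { [hypercertId: string]: IndexedEvaluation[] } = Object.create(null);

  record(evaluation: IndexedEvaluation): void {
    const existing = this.byHypercert[evaluation.hypercertId] || [];
    existing.push(evaluation);
    this.byHypercert[evaluation.hypercertId] = existing;
  }

  // All evaluations for a hypercert, optionally narrowed to one known schema.
  forHypercert(hypercertId: string, schemaId?: string): IndexedEvaluation[] {
    const all = this.byHypercert[hypercertId] || [];
    return schemaId === undefined ? all.slice() : all.filter((e) => e.schemaId === schemaId);
  }
}

const evaluationIndex = new EvaluationIndex();
evaluationIndex.record({ uid: "0x01", schemaId: "schema-basic-attestation", attester: "0xGitcoin", hypercertId: "hypercert-1" });
evaluationIndex.record({ uid: "0x02", schemaId: "schema-project-activity", attester: "0xOSO", hypercertId: "hypercert-1" });
evaluationIndex.record({ uid: "0x03", schemaId: "schema-basic-attestation", attester: "0xGitcoin", hypercertId: "hypercert-2" });
```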

6. V0: Admin can specify an overview page that includes only hypercerts that received an attestation from specific users.

Example use case: The marketplace only shows hypercerts that were attested by “close collaborators” (Gitcoin, Funding the Commons, Zuzalu, Hypercerts Foundation, Green Pill Network, …)

Hypercerts that have not been attested can still be viewed, but are not shown by default.
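A sketch of the default overview filter, assuming attestations are available as (attester, hypercert ID) pairs and close collaborators are identified by address. `overviewHypercerts` and the placeholder labels are hypothetical; `showAll` stands in for viewing unattested hypercerts on request.

```typescript
// Hypothetical filter for a marketplace overview: by default only show
// hypercerts attested by a trusted attester; showAll includes the rest.
interface Attestation {
  attester: string; // attester address
  hypercertId: string;
}

function overviewHypercerts(
  hypercertIds: string[],
  attestations: Attestation[],
  trustedAttesters: string[],
  showAll: boolean = false
): string[] {
  if (showAll) {
    return hypercertIds.slice();
  }
  // Compare addresses case-insensitively, since checksummed and lowercase forms differ.
  const trusted = trustedAttesters.map((a) => a.toLowerCase());
  return hypercertIds.filter((id) =>
    attestations.some(
      (att) => att.hypercertId === id && trusted.indexOf(att.attester.toLowerCase()) !== -1
    )
  );
}

// Placeholder labels standing in for attester addresses.
const closeCollaborators = ["0xGitcoin", "0xGreenPill"];
const attestations: Attestation[] = [
  { attester: "0xgitcoin", hypercertId: "hypercert-1" },
  { attester: "0xSomeoneElse", hypercertId: "hypercert-2" },
];
const allHypercerts = ["hypercert-1", "hypercert-2", "hypercert-3"];
```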

7. V0: Admin can specify an overview page that includes only hypercerts that received a specific label from a specific user

Example use case: An overview page includes all hypercerts that Gitcoin labeled as part of GG19.

Similar to 6, but add 'tags' to the filter
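Adding the tag to the filter, as a sketch assuming each attestation carries a list of tags and tags are matched exactly (which relies on agreeing on tag casing). `hypercertsLabeledBy` and the sample data are hypothetical.

```typescript
// Hypothetical filter for item 7: hypercerts that a given attester labelled
// with a given tag (e.g. Gitcoin + "GG19").
interface TaggedAttestation {
  attester: string;
  hypercertId: string;
  tags: string[];
}

function hypercertsLabeledBy(
  attestations: TaggedAttestation[],
  attester: string,
  tag: string
): string[] {
  const result: string[] = [];
  for (const att of attestations) {
    const sameAttester = att.attester.toLowerCase() === attester.toLowerCase();
    if (sameAttester && att.tags.indexOf(tag) !== -1 && result.indexOf(att.hypercertId) === -1) {
      result.push(att.hypercertId); // each hypercert listed once
    }
  }
  return result;
}

// Placeholder data; attester labels stand in for addresses.
const taggedAttestations: TaggedAttestation[] = [
  { attester: "0xGitcoin", hypercertId: "hypercert-1", tags: ["GG19"] },
  { attester: "0xGitcoin", hypercertId: "hypercert-1", tags: ["GG19", "climate"] },
  { attester: "0xGitcoin", hypercertId: "hypercert-2", tags: ["GG18"] },
  { attester: "0xSomeoneElse", hypercertId: "hypercert-3", tags: ["GG19"] },
];
```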

Implementation

Phase 1: Attestations with tags by close collaborators.

Contracts

For items 1 & 2, no contract work is needed: we can use the EAS infrastructure to design the schemas and expose them in the SDK.
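To illustrate what designing the schemas could involve: EAS schema definitions are written as comma-separated `type name` pairs using Solidity ABI types. The sketch below assembles such strings for items 1 and 2; the field names, and identifying a hypercert by chain ID, contract address and token ID (hypercerts are ERC-1155 tokens), are assumptions rather than registered schemas.

```typescript
// Hypothetical field lists for the Phase 1 schemas; names and types are
// illustrative, not registered EAS schemas.
interface SchemaField {
  type: string; // Solidity ABI type, e.g. "uint256", "address", "string[]"
  name: string;
}

// EAS schema definitions are comma-separated "type name" pairs.
function toEasSchema(fields: SchemaField[]): string {
  return fields.map((field) => `${field.type} ${field.name}`).join(", ");
}

// Item 1: plain attestation of a hypercert (identified by chain, contract, token).
const attestationFields: SchemaField[] = [
  { type: "uint256", name: "chain_id" },
  { type: "address", name: "contract_address" },
  { type: "uint256", name: "token_id" },
  { type: "string[]", name: "sources" },
];

// Item 2: the same fields plus tags; an empty tags array would cover item 1
// if a single schema is preferred.
const taggedAttestationFields: SchemaField[] = attestationFields.concat([
  { type: "string[]", name: "tags" },
]);

// Constants like these are one way the SDK could expose the schemas.
const HYPERCERT_ATTESTATION_SCHEMA = toEasSchema(attestationFields);
const HYPERCERT_TAGGED_ATTESTATION_SCHEMA = toEasSchema(taggedAttestationFields);
```

Because an EAS schema's field list is fixed once registered, the choice between two schemas and one schema with a possibly empty `tags` array has to be made up front.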

Application

For simplicity and velocity, we will create a simple evaluation app based on the starter app. This allows us to rapidly implement the EAS evaluation flow and identify best practices before we bring them into the main application.

Graph

EAS provides a GraphQL API so our graph can remain untouched.
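As a sketch of reading attestations through EAS instead of our graph, the function below builds a GraphQL request body. The query shape (`attestations`, `where`, `equals` and the selected fields) is an assumption based on EAS's published examples and should be checked against the EAS docs; `attestationsRequest` and the placeholder IDs are hypothetical.

```typescript
// Builds a request body for the EAS GraphQL API. The query shape is an
// assumption based on EAS's published examples; verify before use.
interface GraphQLRequest {
  query: string;
  variables: { [name: string]: string };
}

const HYPERCERT_ATTESTATIONS_QUERY = `
  query HypercertAttestations($schemaId: String!, $recipient: String!) {
    attestations(
      where: { schemaId: { equals: $schemaId }, recipient: { equals: $recipient } }
    ) {
      id
      attester
      recipient
      decodedDataJson
    }
  }
`;

function attestationsRequest(schemaId: string, recipient: string): GraphQLRequest {
  return { query: HYPERCERT_ATTESTATIONS_QUERY, variables: { schemaId, recipient } };
}

// Placeholder schema UID and recipient address.
const attestationsReq = attestationsRequest("0xSchemaUid", "0xHypercertOwner");
const body = JSON.stringify(attestationsReq); // JSON body to POST to the EAS GraphQL endpoint
```

The body would be sent as a JSON POST to the EAS GraphQL endpoint for the chain in use; nothing in the hypercerts graph has to change for this.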

Open questions

