From 0f7716f9471df86e0275b8eee042f26cd10a20f2 Mon Sep 17 00:00:00 2001

From: Eli <43382407+eli64s@users.noreply.github.com>

Date: Tue, 1 Aug 2023 07:56:27 -0500

Subject: [PATCH] Update readme file

---

README.md | 597 +++++++++++++++++++++++++++++++++++-------------------

1 file changed, 384 insertions(+), 213 deletions(-)

diff --git a/README.md b/README.md

index 7084fc20..266a5281 100644

--- a/README.md

+++ b/README.md

@@ -1,305 +1,476 @@

-

---

-## 📒 Table of Contents

-- [📒 Table of Contents](#-table-of-contents)

+## 📖 Table of Contents

+

+- [📖 Table of Contents](#-table-of-contents)

- [📍 Overview](#-overview)

-- [⚙️ Features](#-features)

-- [📂 Project Structure](#project-structure)

-- [🧩 Modules](#modules)

+ - [🎯 *Motivation*](#-motivation)

+ - [⚠️ *Disclaimer*](#️-disclaimer)

+- [👾 Demo](#-demo)

+- [⚙️ Features](#️-features)

- [🚀 Getting Started](#-getting-started)

-- [🗺 Roadmap](#-roadmap)

+ - [✔️ Dependencies](#️-dependencies)

+ - [📂 Repository](#-repository)

+ - [🔐 OpenAI API](#-openai-api)

+ - [📦 Installation](#-installation)

+ - [🎮 Using *README-AI*](#-using-readme-ai)

+ - [🧪 Running Tests](#-running-tests)

+- [🛠 Future Development](#-future-development)

+- [📒 Changelog](#-changelog)

- [🤝 Contributing](#-contributing)

- [📄 License](#-license)

- [👏 Acknowledgments](#-acknowledgments)

---

-

## 📍 Overview

-The readme-ai project is a command line tool that generates high-quality README.md files for codebases using natural language processing and analysis of dependencies. It leverages the OpenAI API to generate text summaries, slogans, and overviews for the code. The tool also extracts file contents and dependencies from the codebase and provides options for customization. Its core value proposition lies in simplifying the process of creating informative and well-structured README files, thus enhancing the overall documentation and user experience for code repositories.

+*README-AI* is a powerful, user-friendly command-line tool that generates extensive README markdown documents for your software and data projects. Given a remote repository URL or a local path to your codebase, it generates documentation for the entire project using large language models through OpenAI's GPT APIs.

+

+#### 🎯 *Motivation*

+

+*README-AI* simplifies the process of writing and maintaining high-quality project documentation. My aim for this project is to give developers of all skill levels a tool that improves their technical workflow in an efficient, user-friendly manner. Ultimately, the goal of *README-AI* is to improve the adoption and usability of open-source projects, enabling everyone to better understand and use open-source tools.

+#### ⚠️ *Disclaimer*

+

+*README-AI* is currently under development and has an opinionated configuration and setup. While this tool provides an excellent starting point for documentation, it's important to review all text generated by the OpenAI API to ensure it accurately represents your codebase. Before running the tool, make sure your repository is free of sensitive information, since file contents are sent to the OpenAI API to generate summaries.

+

+Additionally, frequently monitor your API usage and costs by visiting the [OpenAI API Usage Dashboard](https://platform.openai.com/account/usage).

---

-## ⚙️ Features

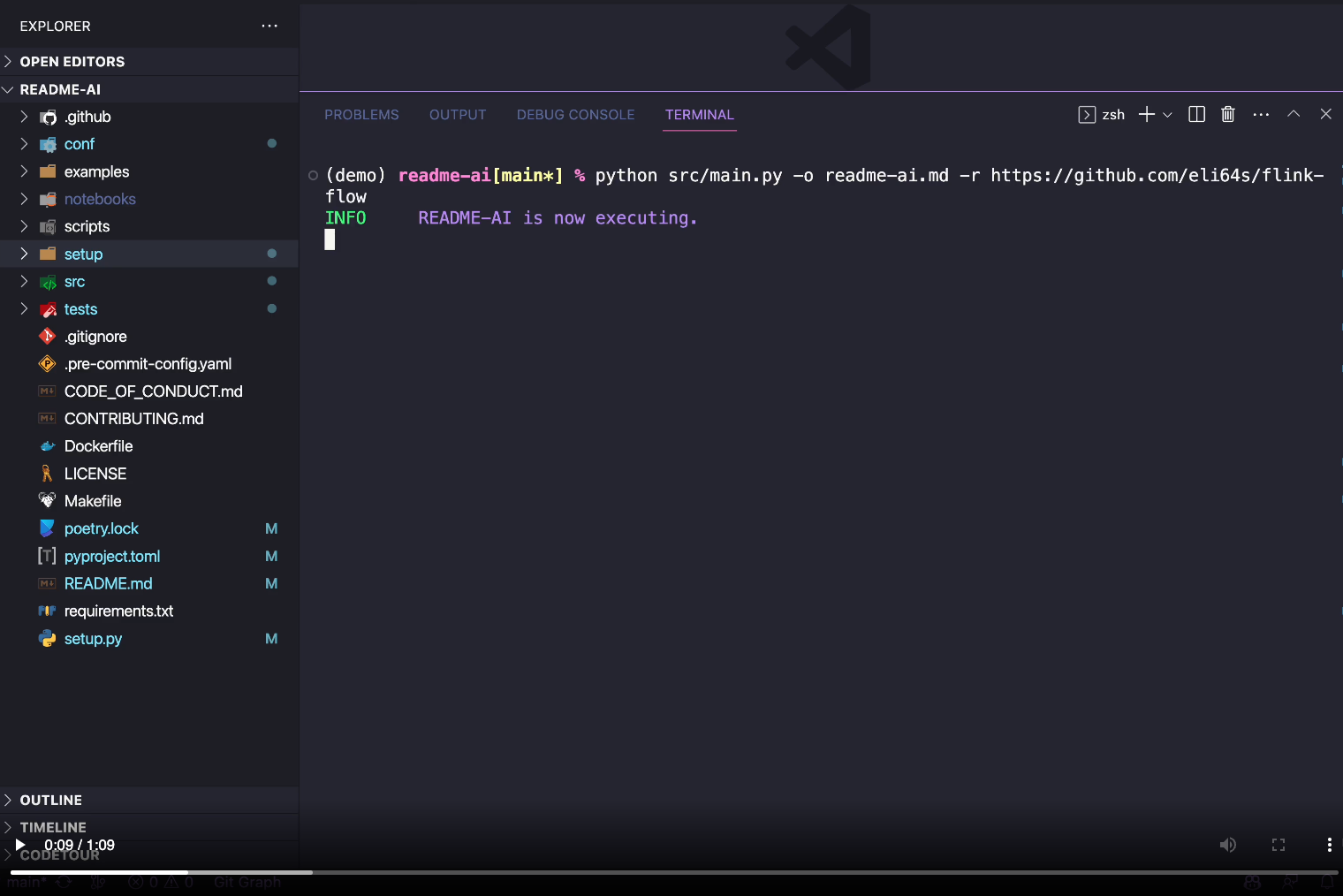

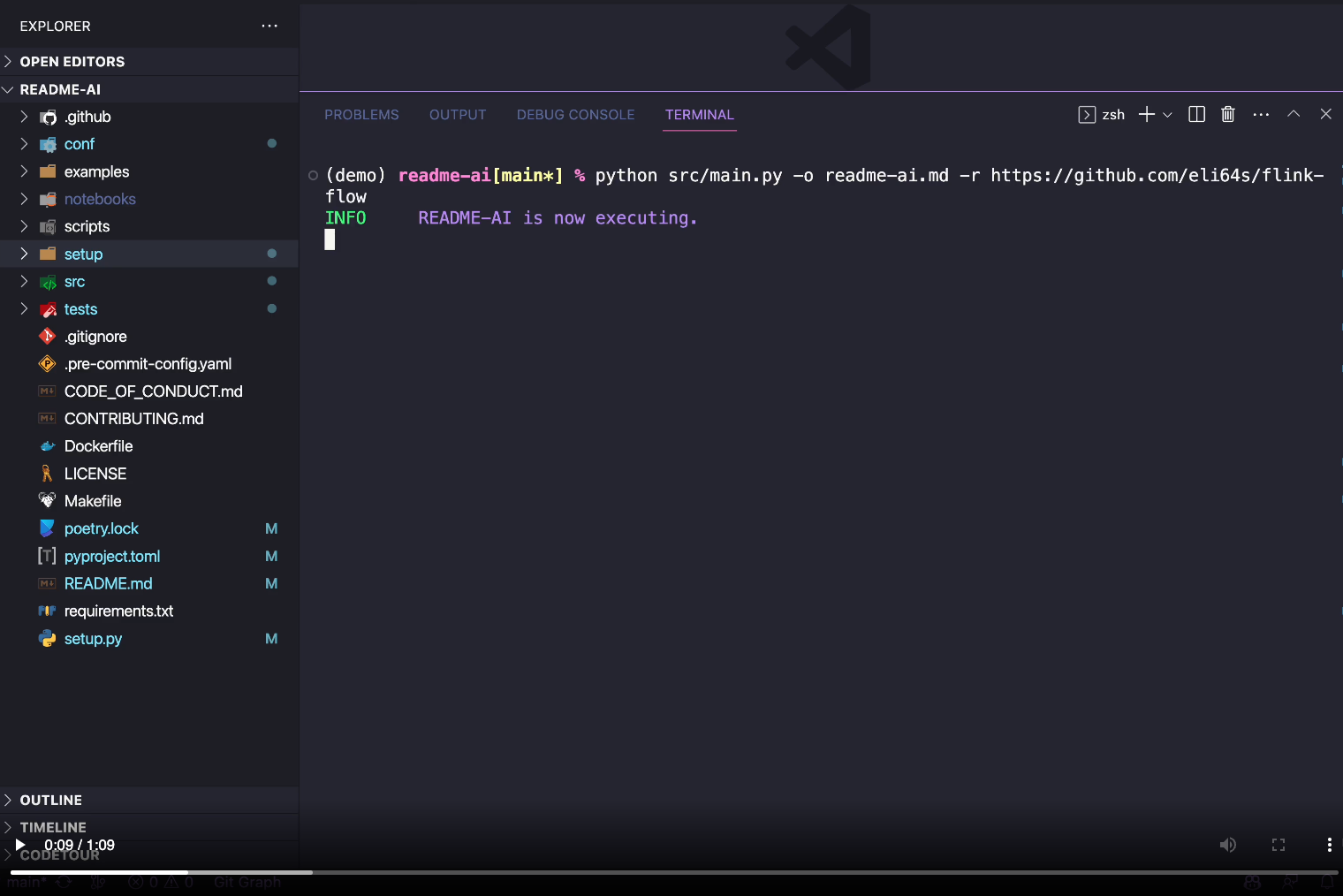

+## 👾 Demo

-Here is a comprehensive technical analysis of the Git codebase at https://github.com/eli64s/readme-ai:

+

+  +

-| Feature | Description |

-| ---------------------- | ------------------------------------- |

-| **⚙️ Architecture** | The system follows a modular architecture, dividing functionality into smaller components. Docker is used for containerization and a Makefile provides convenient management commands. The overall structure is well-organized and clear. |

-| **📖 Documentation** | The project includes documentation for each file and folder in the repository, providing relevant details about their purpose and usage. The documentation is concise, informative, and greatly contributes to the understandability of the system. |

-| **🔗 Dependencies** | The system relies on external libraries such as PyPI, OpenAI API, Streamlit, and Docker Buildx. Dependencies are managed using pip and conda. The use of these libraries enhances the functionality and efficiency of the system. |

-| **🧩 Modularity** | The codebase demonstrates a high level of modularity, with clear separation of concerns and the organization of functionality into separate files and directories. Modular components facilitate code reuse and maintainability. |

-| **✔️ Testing** | While there is no explicit mention of testing tools or strategies present in the repository, it is important to note the potential for testing these modular components. Testing the functionality and integration points, as well as the CLI tool, would enhance the reliability of the system. |

-| **⚡️ Performance** | The performance of the system, such as speed, efficiency, and resource usage, cannot be fully determined from the codebase alone. However, the use of Docker images and caching functionality indicates considerations for performance optimization. |

-| **🔐 Security** | The codebase does not reveal explicit security measures in place. Protecting against data breaches and maintaining functionality are important considerations. Security features should be implemented, such as validating user input, encrypting sensitive data, and utilizing appropriate access controls. |

-| **🔀 Version Control** | The codebase leverages Git for version control. Git enables collaborative development, code tracking, and managing changes between different versions efficiently. Using GitHub as the remote repository allows for easy collaboration and code review processes. |

-| **🔌 Integrations** | The system interacts with various external systems and services, such as GitHub, Docker registry, and the OpenAI API. These integrations provide added functionality for repository fetching, Docker image building, and natural language processing, respectively. |

-| **📶 Scalability** | While the system's scalability cannot be determined solely from the codebase, Docker containers provide a foundation for scalability. With proper container orchestration tools, the system can be horizontally scaled to handle increasing loads and increased demand over time. |

+

---

+## ⚙️ Features

-## 📂 Project Structure

-

-

-```bash

-repo

-├── CHANGELOG.md

-├── CODE_OF_CONDUCT.md

-├── CONTRIBUTING.md

-├── Dockerfile

-├── LICENSE

-├── Makefile

-├── Procfile

-├── README.md

-├── conf

-│ ├── conf.toml

-│ ├── dependency_files.toml

-│ ├── ignore_files.toml

-│ ├── language_names.toml

-│ ├── language_setup.toml

-│ └── svg

-│ ├── badges.json

-│ └── badges_compressed.json

-├── examples

-│ ├── imgs

-│ │ ├── closing.png

-│ │ ├── demo.png

-│ │ ├── features.png

-│ │ ├── getting_started.png

-│ │ ├── header.png

-│ │ ├── modules.png

-│ │ ├── overview.png

-│ │ └── tree.png

-│ ├── readme-energy-forecasting.md

-│ ├── readme-fastapi-redis.md

-│ ├── readme-go.md

-│ ├── readme-java.md

-│ ├── readme-javascript.md

-│ ├── readme-kotlin.md

-│ ├── readme-lanarky.md

-│ ├── readme-mlops.md

-│ ├── readme-pyflink.md

-│ ├── readme-python.md

-│ ├── readme-rust-c.md

-│ └── readme-typescript.md

-├── heroku.yml

-├── poetry.lock

-├── pyproject.toml

-├── readmeai

-│ ├── __init__.py

-│ ├── app

-│ │ ├── Dockerfile

-│ │ ├── __init__.py

-│ │ └── streamlit_app.py

-│ ├── builder.py

-│ ├── conf.py

-│ ├── factory.py

-│ ├── logger.py

-│ ├── main.py

-│ ├── model.py

-│ ├── parse.py

-│ ├── preprocess.py

-│ └── utils.py

-├── requirements.txt

-├── scripts

-│ ├── build_image.sh

-│ ├── clean.sh

-│ ├── run.sh

-│ ├── run_batch.sh

-│ └── test.sh

-├── setup

-│ ├── environment.yaml

-│ └── setup.sh

-└── tests

- ├── __init__.py

- ├── conftest.py

- ├── test_builder.py

- ├── test_conf.py

- ├── test_factory.py

- ├── test_logger.py

- ├── test_main.py

- ├── test_model.py

- ├── test_parse.py

- ├── test_preprocess.py

- └── test_utils.py

-

-10 directories, 70 files

-```

+

+


+1.

👇

📑 Codebase Documentation

+

+

+

+ ◦ Repository File Summaries

+

+ - Code summaries are generated for each file via OpenAI's gpt-3.5-turbo engine.

+ - The File column in the markdown table contains a link to the file on GitHub.

+

+ |

+

+

+

+  +

+ |

+

+

+
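The file summaries are rendered as a markdown table whose File column links to each file on GitHub. A minimal sketch of building such a table, assuming summaries arrive as a `{relative_path: summary}` dict and the default branch is named `main`; `github_file_url` and `build_summary_table` are hypothetical helpers, not README-AI's own code.

```python
from pathlib import PurePosixPath


def github_file_url(repo_url: str, file_path: str, branch: str = "main") -> str:
    """Build a GitHub "blob" link to a file (assumes the branch is named `main`)."""
    return f"{repo_url.rstrip('/')}/blob/{branch}/{file_path}"


def build_summary_table(repo_url: str, summaries: dict[str, str]) -> str:
    """Render {relative_path: summary} pairs as a two-column markdown table."""
    rows = ["| File | Summary |", "| --- | --- |"]
    for path, summary in summaries.items():
        name = PurePosixPath(path).name
        # Escape pipes so a generated summary cannot split into extra table columns.
        safe_summary = summary.replace("|", "\\|")
        rows.append(f"| [{name}]({github_file_url(repo_url, path)}) | {safe_summary} |")
    return "\n".join(rows)
```

For example, `build_summary_table("https://github.com/eli64s/readme-ai", {"readmeai/main.py": "CLI entry point."})` yields a row linking `main.py` to `https://github.com/eli64s/readme-ai/blob/main/readmeai/main.py`.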

+⒉

👇

🎖 Badges

+

+

+

+ ◦ Introduction, Badges, & Table of Contents

+

+ - The OpenAI API is prompted to create a one-sentence slogan describing your project.

+ - Project dependencies and metadata are visualized using Shields.io badges.

+ - Badges are sorted by hex code, displayed from light to dark hues.

+

+ |

+

+

+

+  +

+ |

+

+

+
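The exact ordering rule is not spelled out here; "light to dark" suggests sorting badge colors by brightness. A minimal sketch, assuming each badge is a `(name, "#RRGGBB")` pair and brightness is approximated with the Rec. 601 luma weights. This is a swapped-in technique for illustration, not necessarily how README-AI sorts its badges.

```python
def luma(hex_color: str) -> float:
    """Approximate perceived brightness of a '#RRGGBB' color (Rec. 601 weights)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.299 * r + 0.587 * g + 0.114 * b


def sort_badges_light_to_dark(badges: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Sort (name, hex_color) pairs from the lightest color to the darkest."""
    return sorted(badges, key=lambda badge: luma(badge[1]), reverse=True)
```

Sorting on a brightness key rather than on the raw hex string keeps, for example, a mid-blue between white and black, which a plain string comparison of hex codes does not guarantee.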

+⒊

👇

🧚 Prompted Text Generation

+

+

+

+ ◦ Features Table & Overview

+

+ - Detailed prompts are embedded with repository metadata and passed to the OpenAI API.

+

+ - Features table highlights various technical attributes of your codebase.

+

+ - Overview section describes your project's use case and applications.

+

+

+

+ |

+

+

+

+  +

+  +

+ |

+

+

+
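Embedding repository metadata in a prompt amounts to filling placeholders in a template before the request is sent. A minimal sketch, assuming a hypothetical `FEATURES_PROMPT` template and a `build_prompt` helper; README-AI's real prompts and placeholder names are defined in its own configuration and may differ.

```python
# Hypothetical template; the real README-AI prompts are not reproduced here.
FEATURES_PROMPT = (
    "Analyze the repository {repo_url}.\n"
    "Dependencies: {dependencies}\n"
    "File summaries:\n{summaries}\n"
    "Return a markdown table describing the project's technical features."
)


def build_prompt(template: str, repo_url: str, dependencies: list[str], summaries: dict[str, str]) -> str:
    """Embed repository metadata into a prompt template."""
    summary_lines = "\n".join(f"- {path}: {text}" for path, text in summaries.items())
    return template.format(
        repo_url=repo_url,
        # De-duplicate and sort so the prompt is stable across runs.
        dependencies=", ".join(sorted(set(dependencies))),
        summaries=summary_lines,
    )
```

`str.format` does not re-parse substituted values, so braces inside file summaries are safe; only literal braces in the template itself would need doubling.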

+⒋

👇

🌲 Repository Tree

+

+

+

+  +

+ |

+

+

+
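The repository tree mirrors the output of the `tree` command. As a sketch under assumptions, the same layout can be produced in pure Python with `pathlib`; `render_tree` is a hypothetical helper, not README-AI's implementation. Entries are sorted by name using plain string order, so uppercase names come first (for example `README.md` before `conf`), and symlinked directories are not followed, to avoid loops.

```python
from pathlib import Path


def render_tree(root: Path, prefix: str = "") -> list[str]:
    """Return `tree`-style lines for everything below `root`."""
    entries = sorted(root.iterdir(), key=lambda entry: entry.name)
    lines: list[str] = []
    for index, entry in enumerate(entries):
        is_last = index == len(entries) - 1
        lines.append(f"{prefix}{'└── ' if is_last else '├── '}{entry.name}")
        if entry.is_dir() and not entry.is_symlink():
            # Descendants of the last entry get blank padding instead of a │ guide.
            lines.extend(render_tree(entry, prefix + ("    " if is_last else "│   ")))
    return lines
```

Usage: `print("\n".join(render_tree(Path("."))))` prints the current directory in the same shape as `tree`, minus the root line and the summary count.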

+⒌

👇

📦 Dynamic User Setup Guides

+

+

+

+ ◦ Installation, Usage, & Testing

+

+ - Generates instructions for installing, using, and testing your codebase.

+ - README-AI currently supports this feature for code written in:
+
+    - Python, Rust, Go, C, Kotlin, Java, JavaScript, TypeScript.

+

+

+

+ |

+

+

+

+  +

+ |

+

+

+
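Generating setup guides boils down to detecting a project's main language and looking up the matching commands. A minimal sketch, assuming the language is inferred from file extensions and mapped to standard ecosystem commands; the `EXTENSION_TO_LANGUAGE` and `SETUP_COMMANDS` tables and the `setup_guide` helper are hypothetical and cover only a subset of the supported languages. README-AI keeps its own mappings in its configuration files.

```python
from collections import Counter
from pathlib import PurePath

# Illustrative mappings only; not README-AI's configuration.
EXTENSION_TO_LANGUAGE = {".py": "python", ".rs": "rust", ".go": "go", ".js": "javascript"}

SETUP_COMMANDS = {
    "python": {"setup": "pip install -r requirements.txt", "test": "pytest"},
    "rust": {"setup": "cargo build", "test": "cargo test"},
    "go": {"setup": "go build ./...", "test": "go test ./..."},
    "javascript": {"setup": "npm install", "test": "npm test"},
}


def setup_guide(file_paths: list[str]) -> dict[str, str]:
    """Return setup/test commands for the most common known language in the file list."""
    languages = Counter(
        EXTENSION_TO_LANGUAGE[suffix]
        for suffix in (PurePath(path).suffix for path in file_paths)
        if suffix in EXTENSION_TO_LANGUAGE
    )
    if not languages:
        return {}  # No recognized source files: leave the section for manual editing.
    top_language, _ = languages.most_common(1)[0]
    return dict(SETUP_COMMANDS[top_language])  # Copy so callers cannot mutate the table.
```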

+⒍

👇

👩‍💻 Contributing Guidelines & more!

+

+| |

+|-----------------------------------------------|

+|  |

+

+⒎

👇

💥 Example Files

+Markdown example files generated by the readme-ai app!

+

+| | Example File | Repository | Language | Bytes |
+|---|---|---|---|---|
+| 1️⃣ | readme-python.md | readme-ai | Python | 19,839 |
+| 2️⃣ | readme-typescript.md | chatgpt-app-react-typescript | TypeScript, React | 988 |
+| 3️⃣ | readme-javascript.md | assistant-chat-gpt-javascript | JavaScript, React | 212 |
+| 4️⃣ | readme-kotlin.md | file.io-android-client | Kotlin, Java, Android | 113,649 |
+| 5️⃣ | readme-rust-c.md | rust-c-app | C, Rust | 72 |
+| 6️⃣ | readme-go.md | go-docker-app | Go | 41 |
+| 7️⃣ | readme-java.md | java-minimal-todo | Java | 17,725 |
+| 8️⃣ | readme-fastapi-redis.md | async-ml-inference | Python, FastAPI, Redis | 355 |
+| 9️⃣ | readme-mlops.md | mlops-course | Python, Jupyter | 8,524 |
+| 🔟 | readme-pyflink.md | flink-flow | PyFlink | 32 |

+

+

+⒏

👇

📜 Custom README templates coming soon!

+An upcoming feature will let users select from a variety of README formats and styles.
+Custom templates will be tailored to use cases such as data, AI & ML, research, minimal, and more!

+

+

+ 🔝 Return

+

---

-## 🧩 Modules

-

-Root

+## 🚀 Getting Started

-| File | Summary |

-| --- | --- |

-| [Dockerfile](https://github.com/eli64s/readme-ai/blob/main/Dockerfile) | The code snippet creates a Docker image with Python 3.9 and installs system dependencies like git and tree. It sets up a non-root user, adds it to a specific group, and sets the working directory permissions accordingly. It installs a specific version of the "readmeai" package from PyPI using pip. Finally, it sets the command to run the "readmeai" CLI tool when the Docker image is run. |

-| [Makefile](https://github.com/eli64s/readme-ai/blob/main/Makefile) | This Makefile provides commands for managing the project. It includes functions for cleaning files, executing style formatting, creating conda and virtual environments, and fixing git untracked files. |

-| [Procfile](https://github.com/eli64s/readme-ai/blob/main/Procfile) | The provided code snippet runs a Streamlit application by executing the `streamlit run` command with the `streamlit_app.py` file as an argument. This allows users to interact with the web application and explore its features. |

+### ✔️ Dependencies

-Setup

+- Python 3.9, 3.10, 3.11

+- Pip, Poetry, Conda, or Docker (see installation methods below)

+- OpenAI API account and API key (see setup instructions below)

-| File | Summary |

-| --- | --- |

-| [setup.sh](https://github.com/eli64s/readme-ai/blob/main/setup/setup.sh) | This code snippet is a bash script that performs the setup for a conda environment named "readmeai." It ensures that the "tree" command is installed, checks for the presence of Git and Conda, verifies the Python version, creates the "readmeai" environment if necessary, installs required packages using pip, and activates the environment. |

+#### 📂 Repository

-Scripts

+```toml

+[git]

+repository = "Insert your repository URL or local path here!"

+```
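The `[git] repository` setting accepts either a remote URL or a local path. A minimal sketch of reading that value from TOML text and telling the two cases apart; `load_repository` and `classify_repository` are hypothetical helpers, not README-AI's own loader. It uses the standard-library `tomllib` (Python 3.11+) and falls back to the third-party `tomli` package, which offers the same API, on Python 3.9 and 3.10.

```python
from pathlib import Path
from urllib.parse import urlparse

try:
    import tomllib  # Standard library on Python 3.11+.
except ModuleNotFoundError:  # Python 3.9/3.10: `pip install tomli`.
    import tomli as tomllib


def load_repository(config_text: str) -> str:
    """Return the [git] repository value from TOML configuration text."""
    return tomllib.loads(config_text)["git"]["repository"]


def classify_repository(value: str) -> str:
    """Label the value 'remote' (http/https URL) or 'local' (existing directory)."""
    if urlparse(value).scheme in ("http", "https"):
        return "remote"
    if Path(value).expanduser().is_dir():
        return "local"
    raise ValueError(f"Not an http(s) URL or an existing directory: {value!r}")
```

Failing early on a value that is neither a URL nor an existing directory avoids spending API requests on a repository that cannot be read.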

-| File | Summary |

-| --- | --- |

-| [run_batch.sh](https://github.com/eli64s/readme-ai/blob/main/scripts/run_batch.sh) | The provided code snippet is a bash script that fetches repositories from GitHub and runs a Python script to generate a Markdown file with the repository's README content. It iterates over a list of repository URLs, extracts the repository name, and calls the Python script with the appropriate parameters. |

-| [build_image.sh](https://github.com/eli64s/readme-ai/blob/main/scripts/build_image.sh) | The code snippet builds a Docker image named "readmeai-app" with version "latest". It uses Docker Buildx to create a build environment, pulls the necessary dependencies, and builds the image for linux/amd64 and linux/arm64 platforms. Finally, it pushes the image to a Docker registry. |

-| [run.sh](https://github.com/eli64s/readme-ai/blob/main/scripts/run.sh) | This Bash code snippet activates a specified conda environment called readmeai and runs a Python script called main.py within that environment. Setting and exporting environment variables could also be performed before activating the conda environment. |

-| [clean.sh](https://github.com/eli64s/readme-ai/blob/main/scripts/clean.sh) | This script removes unnecessary files and directories, such as backup files, Python cache files, cache directories, VS Code settings, build artifacts, pytest cache, benchmarks, and specific files like CSV data and log files. |

-| [test.sh](https://github.com/eli64s/readme-ai/blob/main/scripts/test.sh) | The code snippet activates a conda environment, sets directories to include and exclude in a coverage report, runs pytest with coverage, generates and displays the coverage report, and removes temporary files and folders. |

+#### 🔐 OpenAI API

-Readmeai

+OpenAI API User Guide

-| File | Summary |

-| --- | --- |

-| [preprocess.py](https://github.com/eli64s/readme-ai/blob/main/readmeai/preprocess.py) | This code snippet handles the preprocessing of a codebase. It provides functions for analyzing a local or remote git repository, extracting file contents and dependencies, tokenizing content, and mapping file extensions to programming languages. |

-| [conf.py](https://github.com/eli64s/readme-ai/blob/main/readmeai/conf.py) | The provided code snippet defines multiple Pydantic models and data classes for handling configuration constants related to an application. It includes models for OpenAI API configuration, Git repository configuration, Markdown configuration, paths to configuration files, LLM prompts configuration, and the overall application configuration. The code also includes helper classes for loading and handling the configuration data. |

-| [logger.py](https://github.com/eli64s/readme-ai/blob/main/readmeai/logger.py) | This code snippet defines a custom logger class that configures and handles logging using the `logging` module. It provides methods to log messages at different levels (info, debug, warning, error, critical) and allows logging at a specified level using the `log` method. The logger class uses the `colorlog` library to format and display log messages in different colors based on their level. |

-| [factory.py](https://github.com/eli64s/readme-ai/blob/main/readmeai/factory.py) | This code snippet provides a FileHandler class that acts as a factory for reading and writing different file formats like JSON, Markdown, TOML, TXT, and YAML. It utilizes specific methods for each file format and handles exceptions related to file I/O operations. Additionally, it includes caching functionality to improve performance by storing previously read file contents in memory. |

-| [model.py](https://github.com/eli64s/readme-ai/blob/main/readmeai/model.py) | The provided code snippet is an OpenAI API handler that generates text for the README.md file. It includes functions to convert code to natural language text and chat to generate text using prompts. It handles API requests, rate limits, and caching. The code provides error handling and includes retry logic for failed API requests. The handler also has the ability to close the HTTP client. |

-| [builder.py](https://github.com/eli64s/readme-ai/blob/main/readmeai/builder.py) | The provided code snippet is a function named `build_markdown_file` that generates a README Markdown file for a codebase. It takes in app configuration, a config helper, a list of packages, and summaries as inputs. The function then creates different sections of the README file using helper functions. It generates badges, creates tables with code summaries, generates a directory tree, and creates the "Getting Started" section. Finally, it writes the README file and logs the file path. |

-| [utils.py](https://github.com/eli64s/readme-ai/blob/main/readmeai/utils.py) | This code snippet includes various utility methods for the readme-ai application. It provides functions for cloning repositories, extracting GitHub file links, manipulating text strings, validating files and URLs, and performing other useful operations like flattening nested lists. Overall, these functions contribute to the core functionalities of the application, facilitating various operations related to GitHub repositories and text manipulation. |

-| [parse.py](https://github.com/eli64s/readme-ai/blob/main/readmeai/parse.py) | The provided code snippet implements various methods to parse different types of files and extract their dependencies. It supports parsing of files such as Docker Compose, conda environment files, Pipfiles, Pipfile.lock, pyproject.toml, requirements.txt, Cargo.toml, Cargo.lock, package.json, yarn.lock, package-lock.json, go.mod, build.gradle, pom.xml, CMakeLists.txt, configure.ac, and Makefile.am. The extracted dependencies are returned as a list. |

-| [main.py](https://github.com/eli64s/readme-ai/blob/main/readmeai/main.py) | This code snippet is for a command line interface (CLI) tool called README-AI. It takes in a repository URL or directory path, generates a README file based on the code in the repository, and outputs the generated README file to a specified path. The tool uses the OpenAI API for natural language processing tasks, such as generating code summaries, slogans, overviews, and features. It also extracts dependencies and file text from the repository for analysis. |

+1. Go to the [OpenAI website](https://platform.openai.com/).

+2. Click the "Sign up for free" button.

+3. Fill out the registration form with your information and agree to the terms of service.

+4. Once logged in, click on the "API" tab.

+5. Follow the instructions to create a new API key.

+6. Copy the API key and keep it in a secure place.
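Rather than pasting the key into code or configuration files, keep it in an environment variable. The official `openai` Python package reads `OPENAI_API_KEY` by default; the sketch below assumes README-AI picks up the key the same way, and `get_openai_api_key` and `mask_key` are hypothetical helpers.

```python
import os


def get_openai_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Fetch the API key from the environment instead of hard-coding it."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable to your OpenAI API key.")
    return key


def mask_key(key: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters (everything, if the key is that short)."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]
```

Masking the key before it appears in logs or terminal output keeps it from leaking into screenshots or shared bug reports.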

-App

+> **⚠️ Note**

+>

+> - To get the most out of README-AI, it is recommended to set up a payment method on OpenAI's website. A payment method gives you access to more powerful language models such as gpt-3.5-turbo; without one, your usage is restricted to the base GPT-3 models, which may produce less accurate README files or errors during generation.

+>

+> - When using a payment method, make sure you have sufficient credits to run the README-AI application, and regularly monitor your API usage and costs on the [OpenAI API Usage Dashboard](https://platform.openai.com/account/usage). The API is not free: you are charged for every request, and costs can accumulate rapidly.

+>

+> - Generating the README.md file typically takes under a minute. If it runs for more than a few minutes, terminate the process.

+>
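If a run hangs, the process can be stopped automatically instead of by hand. A minimal sketch using the standard library's `subprocess.run(timeout=...)`, which kills the child process before re-raising `TimeoutExpired`; `run_with_timeout` is a hypothetical helper, the three-minute default is an illustrative reading of "a few minutes", and the actual README-AI command line is left out because its arguments are not documented in this section.

```python
import subprocess


def run_with_timeout(command: list[str], timeout_seconds: float = 180.0) -> subprocess.CompletedProcess:
    """Run a command, terminating it if it exceeds `timeout_seconds`."""
    try:
        return subprocess.run(command, capture_output=True, text=True, timeout=timeout_seconds, check=True)
    except subprocess.TimeoutExpired:
        # subprocess.run has already killed and reaped the child at this point.
        raise RuntimeError(f"Command exceeded {timeout_seconds:g}s and was terminated: {command}") from None
```

Passing `check=True` also surfaces a non-zero exit status as `CalledProcessError`, so a failed generation is not mistaken for a finished one.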

-| File | Summary |

-| --- | --- |

-| [Dockerfile](https://github.com/eli64s/readme-ai/blob/main/readmeai/app/Dockerfile) | The provided code snippet builds a Docker image for a Python application. It sets up the environment, installs system dependencies, creates a non-root user, installs Python dependencies (including readmeai and streamlit), exposes port 8501, and runs the Streamlit server. |

-| [streamlit_app.py](https://github.com/eli64s/readme-ai/blob/main/readmeai/app/streamlit_app.py) | This code snippet is a Streamlit app for README-AI. It allows users to generate a README.md file for their repository by running the README-AI command-line tool. The app takes inputs for the OpenAI API key, output file path, and repository URL or path. It then triggers the generation process and displays the generated README.md with a download button if successful. Progress is shown while generating the README. Overall, it provides a user-friendly interface for utilizing the README-AI tool. |

+---

-

+ 🔝 Return

+

+

+---

+

+## 🛠 Future Development

+

+- [X] Add additional language support for populating the *installation*, *usage*, and *test* README sections.

+- [X] Publish the *readme-ai* CLI app to PyPI as [readmeai](https://pypi.org/project/readmeai/).

+- [ ] Create a user interface and serve the *readme-ai* app via Streamlit.

+- [ ] Design and implement a variety of README template formats for different use-cases.

+- [ ] Add support for writing the README in any language (e.g., CN, ES, FR, JA, KO, RU).

+

+---

+

+## 📒 Changelog

+

+[Changelog](./CHANGELOG.md)

+

+---

+

+## 🤝 Contributing

+

+[Contributing Guidelines](./CONTRIBUTING.md)

---

## 📄 License

-This project is licensed under the `ℹ️ INSERT-LICENSE-TYPE` License. See the [LICENSE](https://docs.github.com/en/communities/setting-up-your-project-for-healthy-contributions/adding-a-license-to-a-repository) file for additional info.

+[MIT](./LICENSE)

---

## 👏 Acknowledgments

-> - `ℹ️ List any resources, contributors, inspiration, etc.`

+*Badges*

+ - [Shields.io](https://shields.io/)

+ - [Aveek-Saha/GitHub-Profile-Badges](https://github.com/Aveek-Saha/GitHub-Profile-Badges)

+ - [Ileriayo/Markdown-Badges](https://github.com/Ileriayo/markdown-badges)

+

+

+ 🔝 Return

+

---

-

-

-

- -

- -

- +

+  +

+  +

+

+

+

+

+

+

+  +

+

+

+

+

+  +

+

+

+

+

-| Feature | Description |

-| ---------------------- | ------------------------------------- |

-| **⚙️ Architecture** | The system follows a modular architecture, dividing functionality into smaller components. Docker is used for containerization and a Makefile provides convenient management commands. The overall structure is well-organized and clear. |

-| **📖 Documentation** | The project includes documentation for each file and folder in the repository, providing relevant details about their purpose and usage. The documentation is concise, informative, and greatly contributes to the understandability of the system. |

-| **🔗 Dependencies** | The system relies on external libraries such as PyPI, OpenAI API, Streamlit, and Docker Buildx. Dependencies are managed using pip and conda. The use of these libraries enhances the functionality and efficiency of the system. |

-| **🧩 Modularity** | The codebase demonstrates a high level of modularity, with clear separation of concerns and the organization of functionality into separate files and directories. Modular components facilitate code reuse and maintainability. |

-| **✔️ Testing** | While there is no explicit mention of testing tools or strategies present in the repository, it is important to note the potential for testing these modular components. Testing the functionality and integration points, as well as the CLI tool, would enhance the reliability of the system. |

-| **⚡️ Performance** | The performance of the system, such as speed, efficiency, and resource usage, cannot be fully determined from the codebase alone. However, the use of Docker images and caching functionality indicates considerations for performance optimization. |

-| **🔐 Security** | The codebase does not reveal explicit security measures in place. Protecting against data breaches and maintaining functionality are important considerations. Security features should be implemented, such as validating user input, encrypting sensitive data, and utilizing appropriate access controls. |

-| **🔀 Version Control** | The codebase leverages Git for version control. Git enables collaborative development, code tracking, and managing changes between different versions efficiently. Using GitHub as the remote repository allows for easy collaboration and code review processes. |

-| **🔌 Integrations** | The system interacts with various external systems and services, such as GitHub, Docker registry, and the OpenAI API. These integrations provide added functionality for repository fetching, Docker image building, and natural language processing, respectively. |

-| **📶 Scalability** | While the system's scalability cannot be determined solely from the codebase, Docker containers provide a foundation for scalability. With proper container orchestration tools, the system can be horizontally scaled to handle increasing loads and increased demand over time. |

+

---

+## ⚙️ Features

-## 📂 Project Structure

-

-

-```bash

-repo

-├── CHANGELOG.md

-├── CODE_OF_CONDUCT.md

-├── CONTRIBUTING.md

-├── Dockerfile

-├── LICENSE

-├── Makefile

-├── Procfile

-├── README.md

-├── conf

-│ ├── conf.toml

-│ ├── dependency_files.toml

-│ ├── ignore_files.toml

-│ ├── language_names.toml

-│ ├── language_setup.toml

-│ └── svg

-│ ├── badges.json

-│ └── badges_compressed.json

-├── examples

-│ ├── imgs

-│ │ ├── closing.png

-│ │ ├── demo.png

-│ │ ├── features.png

-│ │ ├── getting_started.png

-│ │ ├── header.png

-│ │ ├── modules.png

-│ │ ├── overview.png

-│ │ └── tree.png

-│ ├── readme-energy-forecasting.md

-│ ├── readme-fastapi-redis.md

-│ ├── readme-go.md

-│ ├── readme-java.md

-│ ├── readme-javascript.md

-│ ├── readme-kotlin.md

-│ ├── readme-lanarky.md

-│ ├── readme-mlops.md

-│ ├── readme-pyflink.md

-│ ├── readme-python.md

-│ ├── readme-rust-c.md

-│ └── readme-typescript.md

-├── heroku.yml

-├── poetry.lock

-├── pyproject.toml

-├── readmeai

-│ ├── __init__.py

-│ ├── app

-│ │ ├── Dockerfile

-│ │ ├── __init__.py

-│ │ └── streamlit_app.py

-│ ├── builder.py

-│ ├── conf.py

-│ ├── factory.py

-│ ├── logger.py

-│ ├── main.py

-│ ├── model.py

-│ ├── parse.py

-│ ├── preprocess.py

-│ └── utils.py

-├── requirements.txt

-├── scripts

-│ ├── build_image.sh

-│ ├── clean.sh

-│ ├── run.sh

-│ ├── run_batch.sh

-│ └── test.sh

-├── setup

-│ ├── environment.yaml

-│ └── setup.sh

-└── tests

- ├── __init__.py

- ├── conftest.py

- ├── test_builder.py

- ├── test_conf.py

- ├── test_factory.py

- ├── test_logger.py

- ├── test_main.py

- ├── test_model.py

- ├── test_parse.py

- ├── test_preprocess.py

- └── test_utils.py

-

-10 directories, 70 files

-```



+