This document details how to install Netdata on an existing Kubernetes (k8s) cluster. By following these directions, you will use Netdata's Helm chart to bootstrap a Netdata deployment on your cluster. The Helm chart installs one parent pod for storing metrics and managing alarm notifications plus an additional child pod for every node in the cluster.

Each child pod collects metrics from the node it runs on. Through service discovery, it also collects metrics from compatible applications and from any endpoints covered by our generic Prometheus collector. Each child pod also collects cgroups, Kubelet, and kube-proxy metrics from its node.

To install Netdata on a Kubernetes cluster, you need:

- A working cluster running Kubernetes v1.9 or newer.

- The kubectl command line tool, within one minor version difference of your cluster, on an administrative system.

- The Helm package manager v3.0.0 or newer on the same administrative system.
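The one-minor-version rule for kubectl can be checked mechanically. The sketch below uses hypothetical helper functions (`minor_of` and `within_one_minor` are not part of kubectl or Helm). It assumes both versions share the same major number and that you copy the client and server versions from the output of `kubectl version`.

```shell
# Print the minor component of a version such as "v1.21.3" or "1.21".
minor_of() {
  v=${1#v}                        # drop an optional leading "v"
  v=${v#*.}                       # drop the major component and its dot
  printf '%s\n' "${v%%[!0-9]*}"   # keep only the leading digits
}

# Succeed when two versions differ by at most one minor release.
# Assumption: both versions have the same major number.
within_one_minor() {
  a=$(minor_of "$1")
  b=$(minor_of "$2")
  d=$((a - b))
  [ "${d#-}" -le 1 ]              # "${d#-}" strips the sign, giving |a - b|
}

# Example usage with placeholder versions:
# within_one_minor v1.20.4 v1.21.0 && echo "kubectl is within range"
```

The function only compares numbers, so it stays independent of how a particular kubectl release formats its version output.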

The default configuration creates one parent pod, installed on one of your cluster's nodes, and a DaemonSet for additional child pods. This DaemonSet ensures that every node in your k8s cluster also runs a child pod, including the node that also runs the parent. The child pods collect metrics and stream the information to the parent pod, which uses two persistent volumes to store metrics and alarms. The parent pod also handles alarm notifications and enables the Netdata dashboard using an ingress controller.

We recommend you install the Helm chart using our Helm repository. In the helm install command, replace netdata with the release name of your choice.

helm repo add netdata https://netdata.github.io/helmchart/

helm install netdata netdata/netdata

You can also install the Netdata Helm chart by cloning the repository and manually running Helm against the included chart.

Run kubectl get services and kubectl get pods to confirm that your cluster now runs a netdata service, one parent pod, and one child pod for each node in your cluster.
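One way to confirm the child pod count is to compare it with the number of nodes. The sketch below is a hypothetical helper that counts pods by name prefix from `kubectl get pods` output. It assumes the release is named netdata and that child pod names start with `netdata-child`, which you should verify against your own pod list.

```shell
# Count pods whose name starts with the given prefix.
# Reads `kubectl get pods` output on stdin; $1 is the name prefix.
count_pods() {
  awk -v p="$1" 'NR > 1 && index($1, p) == 1 { n++ } END { print n + 0 }'
}

# Example usage (requires access to the cluster):
# kubectl get pods | count_pods netdata-child
# kubectl get nodes --no-headers | wc -l
```

If the two numbers match, every node runs a child pod, as the DaemonSet intends.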

You've now installed Netdata on your Kubernetes cluster. See how to access the Netdata dashboard to confirm it's working as expected, or see the next section to configure the Helm chart to suit your cluster's particular setup.

Read up on the various configuration options in the Helm chart documentation to see if you need to change any of the options based on your cluster's setup.

To change a setting, pass the --set or --values argument to helm install for the initial deployment, or to helm upgrade for an existing deployment.
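The --values route keeps overrides in a file rather than on the command line. As a minimal sketch, the snippet below writes a values file that sets the chart's parent.database.volumesize option, which controls the size of the parent's persistent metrics volume. The file name netdata-values.yml is a placeholder chosen for this example.

```shell
# Write an override file; nested YAML keys mirror the dotted --set path
# parent.database.volumesize.
cat > netdata-values.yml <<'EOF'
parent:
  database:
    volumesize: 4Gi
EOF

# Then pass the file to Helm (requires access to the cluster):
# helm upgrade --values netdata-values.yml netdata netdata/netdata
```

A values file is easier to review and keep in version control than a long list of --set flags.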

helm install --set a.b.c=xyz netdata netdata/netdata

helm upgrade --set a.b.c=xyz netdata netdata/netdata

For example, to change the size of the persistent metrics volume on the parent node:

helm install --set parent.database.volumesize=4Gi netdata netdata/netdata

helm upgrade --set parent.database.volumesize=4Gi netdata netdata/netdata

As mentioned in the introduction, Netdata has a service discovery plugin to identify compatible pods and collect metrics from the service they run. The Netdata Helm chart installs this service discovery plugin into your k8s cluster.

Service discovery scans your cluster for pods exposed on certain ports and with certain image names. By default, it looks for its supported services on the ports they most commonly listen on, and using default image names. Service discovery currently supports popular applications, plus any endpoints covered by our generic Prometheus collector.

If you haven't changed listening ports, image names, or other defaults, service discovery should find your pods, create the proper configurations based on the service that pod runs, and begin monitoring them immediately after deployment.

However, if you have changed some of these defaults, you need to copy a file from the Netdata Helm chart repository, make your edits, and pass the changed file to helm install/helm upgrade.

First, copy the file to your administrative system.

curl https://raw.githubusercontent.com/netdata/helmchart/master/charts/netdata/sdconfig/child.yml -o child.yml

Edit the new child.yml file according to your needs. See the Helm chart configuration and the file itself for details.

You can then run helm install/helm upgrade with the --set-file argument to use your configured child.yml file instead of the default, changing the path if you copied it elsewhere.

helm install --set-file sd.child.configmap.from.value=./child.yml netdata netdata/netdata

helm upgrade --set-file sd.child.configmap.from.value=./child.yml netdata netdata/netdata

Your configured service discovery is now pushed to your cluster.
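A failed download or a mistyped path can leave you passing a missing or empty file to Helm. The sketch below is a hypothetical guard (`sd_file_ok` is not part of Helm or Netdata) that checks the file exists and is not empty before you run the upgrade.

```shell
# Succeed only if the given file exists and is not empty.
sd_file_ok() {
  if [ ! -s "$1" ]; then
    echo "service discovery config is missing or empty: $1" >&2
    return 1
  fi
}

# Example usage (requires access to the cluster):
# sd_file_ok ./child.yml && \
#   helm upgrade --set-file sd.child.configmap.from.value=./child.yml netdata netdata/netdata
```

The check does not validate the file's contents, so review your edits against the file's own comments as well.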

Accessing the Netdata dashboard itself depends on how you set up your k8s cluster and the Netdata Helm chart. If you installed the Helm chart with the default service.type=ClusterIP, you will need to forward a port to the parent pod.

kubectl port-forward netdata-parent-0 19999:19999

You can now access the dashboard at http://localhost:19999 from the system where the command is running, because kubectl port-forward listens on localhost by default.

If you set up the Netdata Helm chart with service.type=LoadBalancer, you can find the external IP for the load balancer with kubectl get services, under the EXTERNAL-IP column.

kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cockroachdb ClusterIP None <none> 26257/TCP,8080/TCP 46h

cockroachdb-public ClusterIP 10.245.148.233 <none> 26257/TCP,8080/TCP 46h

kubernetes ClusterIP 10.245.0.1 <none> 443/TCP 47h

netdata LoadBalancer 10.245.160.131 203.0.113.0 19999:32231/TCP 74m

In the above example, access the dashboard by navigating to http://203.0.113.0:19999.
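If you want to pick the address out of that table in a script, a small filter is enough. The sketch below is a hypothetical helper that assumes the default column layout shown above, where EXTERNAL-IP is the fourth column.

```shell
# Print the EXTERNAL-IP column for one service.
# Reads `kubectl get services` output on stdin; $1 is the service name.
external_ip_for() {
  awk -v name="$1" 'NR > 1 && $1 == name { print $4 }'
}

# Example usage (requires access to the cluster):
# kubectl get services | external_ip_for netdata
```

For a service without an external address, the function prints the placeholder that kubectl shows in that column, such as `<none>`. kubectl's JSONPath output (`-o jsonpath=...`) is another way to read the same field without depending on column positions.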

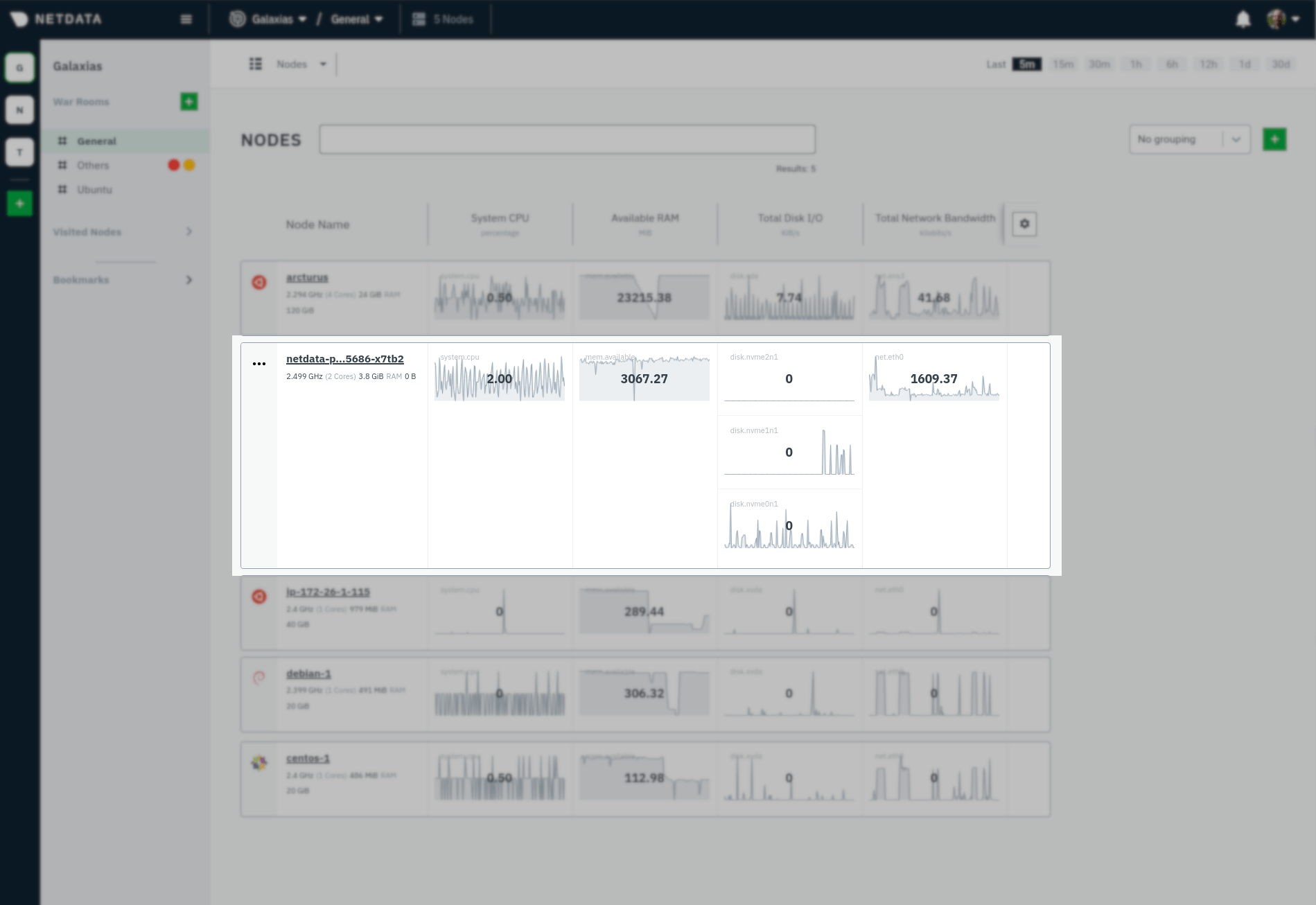

You can claim a cluster's parent Netdata pod to see its real-time metrics alongside any other nodes you monitor using Netdata Cloud.

Netdata Cloud does not currently support claiming child nodes because the Helm chart does not allocate a persistent volume for them.

Ensure persistence is enabled on the parent pod by running the following helm upgrade command.

helm upgrade \
  --set parent.database.persistence=true \
  --set parent.alarms.persistence=true \
  netdata netdata/netdata

Next, find your claiming script in Netdata Cloud by clicking on your Space's dropdown, then Manage your Space. Click the Nodes tab. Netdata Cloud shows a script similar to the following:

sudo netdata-claim.sh -token=TOKEN -rooms=ROOM1,ROOM2 -url=https://app.netdata.cloud

You will need the values of TOKEN and ROOM1,ROOM2 for the helm upgrade command, which sets parent.claiming.enabled, parent.claiming.token, and parent.claiming.rooms to complete the parent pod claiming process.

Run the following helm upgrade command after replacing TOKEN and ROOM1,ROOM2 with the values found in the claiming script from Netdata Cloud. The quotation marks are required.

helm upgrade \
  --set parent.claiming.enabled=true \
  --set parent.claiming.token="TOKEN" \
  --set parent.claiming.rooms="ROOM1,ROOM2" \
  netdata netdata/netdata

The cluster terminates the old parent pod and creates a new one with the proper claiming configuration. You can see your parent pod in Netdata Cloud after a few moments. You can now build new dashboards using the parent pod's metrics or run Metric Correlations to troubleshoot anomalies.
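As an alternative to copying the token and rooms by hand, you could extract them from the claiming script and write them to a values file. Helm's documentation notes that a comma inside a --set value must be escaped with a backslash, so a values file avoids that quoting question when you list several rooms. The sketch below uses hypothetical helpers (`claim_opt` and `write_claim_values`) and assumes the claiming script has the `-token=`, `-rooms=`, and `-url=` form shown earlier.

```shell
# Print the value of a "-name=value" option found in a claiming command.
# $1: option name without the leading dash, $2: the full command line.
claim_opt() {
  for word in $2; do
    case "$word" in
      "-$1="*) printf '%s\n' "${word#*=}" ;;
    esac
  done
}

# Write a values file with the chart's parent.claiming settings.
# $1: claiming command copied from Netdata Cloud, $2: output file.
write_claim_values() {
  token=$(claim_opt token "$1")
  rooms=$(claim_opt rooms "$1")
  cat > "$2" <<EOF
parent:
  claiming:
    enabled: true
    token: "$token"
    rooms: "$rooms"
EOF
}

# Example usage (the file name is a placeholder; requires access to the cluster):
# write_claim_values 'sudo netdata-claim.sh -token=TOKEN -rooms=ROOM1,ROOM2 -url=https://app.netdata.cloud' claim-values.yml
# helm upgrade --values claim-values.yml netdata netdata/netdata
```

Because the token is a credential, treat the generated file as a secret and delete it once the upgrade completes.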

If you update the Helm chart's configuration, run helm upgrade to redeploy your Netdata service, replacing netdata with the name of the release, if you changed it upon installation:

helm upgrade netdata netdata/netdata

Read the monitoring a Kubernetes cluster guide for details on the various metrics and charts created by the Helm chart and some best practices on real-time troubleshooting using Netdata.

Check out our Agent's getting started guide for a quick overview of Netdata's capabilities, especially if you want to change any of the configuration settings for either the parent or child nodes.

To further configure Netdata for your cluster, see our Helm chart repository and the service discovery repository.